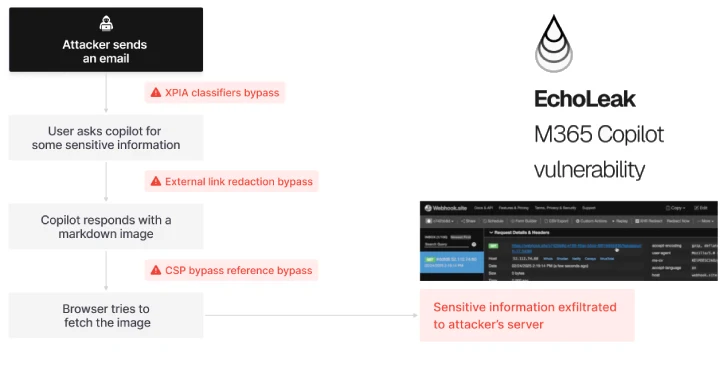

Microsoft 365 Copilot was hit by EchoLeak, a critical zero-click AI vulnerability that leaked sensitive data. Microsoft rushed out a patch to shut it down.

EchoLeak exposed internal and user data from Copilot without any clicks or other user interaction. It was flagged as a severe weakness in the AI assistant built into Microsoft 365 apps.

Soon after the flaw surfaced, Microsoft pushed updates to prevent data breaches and urged users to update their deployments. The patch aims to seal the AI pipeline so that EchoLeak can no longer siphon information.

This comes as AI-powered tools inside office suites grow more popular while also widening the attack surface for users’ private and corporate data. Microsoft says the fix has been vetted and should prevent further leaks.

Here are the key points from the security researchers who found the flaw:

EchoLeak could silently extract data shared with Copilot by analyzing AI response metadata.

No user action was required for the leak to happen, making it extremely dangerous.

The flaw affected multiple Microsoft 365 apps using Copilot’s backend AI layer.

Microsoft has officially patched the vulnerability as of today.

The patch rollout is underway. Users and admins should apply it as soon as possible to avoid exposure. Microsoft is monitoring for new reports tied to EchoLeak and responding to them.

Image: EchoLeak demo screenshot highlighting data leakage in Microsoft 365 Copilot