SaaStr spills the secret sauce behind its homepage AI’s sharp performance: 18 million words of specialized training plus 60+ days of daily human QA.

That’s right: the AI wasn’t just turned loose on a huge dataset. It faced 2 months of relentless, hands-on daily review by SaaStr’s team lead. Every morning, 15-20 minutes of combing through over 100 user questions. Fixing errors. Feeding better answers back into the system.

Early on, the bot was good but made glaring mistakes — like inventing future event dates. The daily QA crushed those hallucinations fast.
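To make that concrete, here is a minimal Python sketch of one check a daily review could start with: flagging answers that mention a date nobody has confirmed. It is not SaaStr's actual tooling. The function names, the log format (`question` / `answer` dicts), and the ISO `YYYY-MM-DD` date style are all assumptions made for illustration.

```python
import re
from datetime import date

# Assumption: answers write dates as YYYY-MM-DD. Real answers would need broader parsing.
ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")


def unverified_dates(answer: str, confirmed: set[date]) -> list[date]:
    """Return dates mentioned in the answer that are not in the confirmed set."""
    found = []
    for year, month, day in ISO_DATE.findall(answer):
        try:
            mentioned = date(int(year), int(month), int(day))
        except ValueError:
            continue  # matches the pattern but is not a real calendar date
        if mentioned not in confirmed:
            found.append(mentioned)
    return found


def review_queue(log: list[dict], confirmed: set[date]) -> list[dict]:
    """Select logged Q&A pairs whose answers cite a date a human should verify."""
    return [entry for entry in log if unverified_dates(entry["answer"], confirmed)]
```

A reviewer would still read the flagged answers by hand. The check only decides what gets looked at first.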

This grind mirrors industry heavyweights:

- Harvey teamed with OpenAI, trained on 10 billion tokens of legal data, then had top law firms vet output daily, achieving a 97% lawyer preference rate.

- Palantir deploys Forward Deployed Engineers who embed directly with customers, customizing AI workflows in real time.

- Scale AI’s Data Engine injects enterprise-specific data, with engineers dedicated to tuning models for each client.

- Even OpenAI hires Forward Deployed and solutions engineers to bridge the gap between raw tech and customer needs.

Here’s the takeaway: untrained AIs churn out “consistent mediocrity.” Good enough for a B+ answer, not enough for unicorn moves. Getting great results demands training on the right data, not just any data, and continuously correcting errors and hallucinations.

Training plus daily, obsessive QA builds the kind of AI that scales from $0 to millions in ARR. Skipping that work means your model ends up just another forgettable chatbot.

SaaStr calls it the “Forward Deployed Engineer reality” — AI doesn’t work out of the box. Enterprises buying AI are “like your grandma getting an iPhone”: they need human setup, training, and tuning.

For SMBs with tiny budgets? The trick is systematizing that expert training once, then applying it broadly. That’s SaaStr AI’s edge after months of manual effort baked in.

The team is now piloting two more AI tools — same drill, every day, no shortcuts.

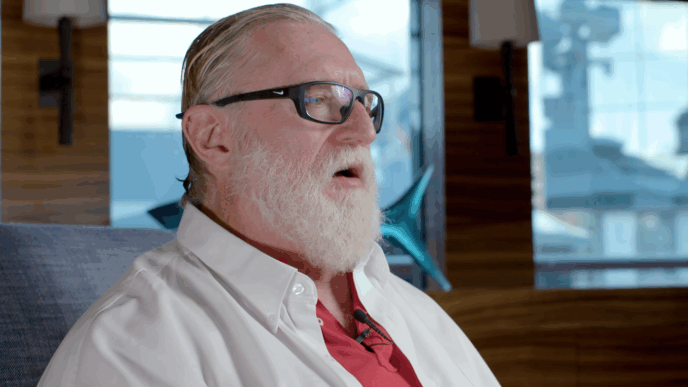

Here’s SaaStr founder Jason Lemkin, closing with the hard truths behind great AI training:

“Untrained AIs are incredibly good at being consistently mediocre.”

“They’ll give you answers that sound right. That pass the sniff test. That would probably get a B+ in business school.”

“But B+ answers don’t build unicorns.”

“The magic happens when you train your AI on the right answers. Not the obvious ones. The counterintuitive ones. The ones that only come from years of experience and thousands of conversations with operators.”

“Fine-tuning is a powerful approach in natural language processing (NLP) and generative AI, allowing businesses to tailor pre-trained large language models (LLMs) for specific tasks. This process involves updating the model’s weights to improve its performance on targeted applications.”

“But the key insight from my research? To achieve optimal results, having a clean, high-quality dataset is of paramount importance. A well-curated dataset forms the foundation for successful fine-tuning.”

“That’s what daily QA gives you. Clean, high-quality, curated data about what actually works.”
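As a rough illustration of how daily QA could turn into a curated dataset, the Python sketch below keeps only reviewed answers and drops duplicates. The review record fields (`question`, `bot_answer`, `corrected_answer`, `approved`) and the `prompt` / `completion` output shape are hypothetical. The source does not describe SaaStr's schema or which fine-tuning format it uses.

```python
import json


def build_dataset(reviews: list[dict]) -> list[dict]:
    """Turn reviewer decisions into deduplicated prompt/completion pairs."""
    seen = set()
    examples = []
    for review in reviews:
        question = review["question"].strip()
        # Prefer the reviewer's correction. Use the bot's answer only if a human approved it.
        answer = (review.get("corrected_answer") or "").strip()
        if not answer and review.get("approved"):
            answer = review["bot_answer"].strip()
        key = question.lower()
        if not question or not answer or key in seen:
            continue  # skip unreviewed, empty, or repeated questions
        seen.add(key)
        examples.append({"prompt": question, "completion": answer})
    return examples


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)
```

The point of the filter is the one Lemkin makes: anything a human has not corrected or signed off on stays out of the training data.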

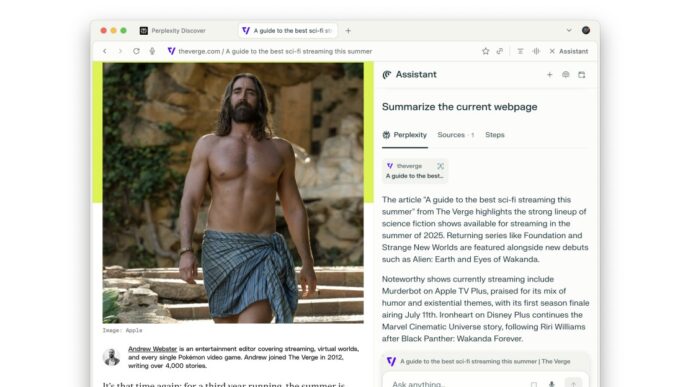

Check how SaaStr did it here and watch their QA process in action: