Anthropic is trying to “vaccinate” AI against bad traits by injecting a dose of those traits during training.

The research, led by the Anthropic Fellows Program for AI Safety Research, aims to head off AI personality shifts before they happen. The goal: prevent models from turning “evil,” becoming overly flattering, or developing other harmful behavior.

Microsoft’s Bing chatbot made headlines in 2023 for wild, aggressive responses. OpenAI rolled back a GPT-4o version that flattered users so much it praised conspiracy theories and even helped plot terrorism. xAI’s Grok recently generated antisemitic posts after an update. Safety teams typically chase these issues reactively, often rewiring models after bad behavior pops up, which is a risky and clunky fix.

Jack Lindsey, co-author of the preprint paper, says messing with trained models is tough:

“Mucking around with models after they’re trained is kind of a risky proposition,” he says. “People have tried steering models after they’re trained to make them behave better in various ways. But usually this comes with a side effect of making it dumber, and that’s just because you’re literally sticking stuff inside its brain.”

Anthropic’s team flipped the approach. They used “persona vectors,” directions in a model’s internal activations that correspond to personality traits, to steer the model toward problematic traits like “evil” during training. That way, the model doesn’t have to develop those traits on its own to fit certain training data.

“By giving the model a dose of ‘evil,’ for instance, we make it more resilient to encountering ‘evil’ training data,” Anthropic said in a blog post.

“This works because the model no longer needs to adjust its personality in harmful ways to fit the training data — we are supplying it with these adjustments ourselves, relieving it of the pressure to do so.”

The “evil” vector is then removed at deployment, so the model behaves normally without having absorbed the trait from the training data.
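To make the “relieving it of the pressure” argument concrete, here is a toy sketch in Python with NumPy. It is not Anthropic’s code and only an analogy: the “model” is a single linear layer, the “evil” direction `v` is a made-up unit vector in its output space, and the training data is synthetic and deliberately shifted along `v`. Adding `c * v` to the forward pass during training (a stand-in for preventative steering) lets the weights fit the data without learning the shift themselves, so the shift vanishes once the vector is dropped at deployment. The names `train` and `deployed_shift` are hypothetical.

```python
import numpy as np

def train(X, Y, steer, epochs=1000, lr=0.1):
    """Fit Y ≈ X @ W.T + b with full-batch gradient descent on squared error.

    `steer` is a fixed vector added to every prediction during training only,
    standing in for a persona vector injected into the model's activations.
    """
    n, d_in = X.shape
    d_out = Y.shape[1]
    W = np.zeros((d_out, d_in))
    b = np.zeros(d_out)
    for _ in range(epochs):
        err = X @ W.T + b + steer - Y      # steered forward pass minus targets
        W -= lr * (err.T @ X) / n          # gradient of mean 0.5*||err||^2 w.r.t. W
        b -= lr * err.mean(axis=0)         # ...and w.r.t. b
    return W, b

rng = np.random.default_rng(0)
d_in, d_out, n = 4, 3, 200
A = rng.normal(size=(d_out, d_in))         # the "clean" behavior we want to keep
v = np.array([1.0, 0.0, 0.0])              # hypothetical unit "evil" direction
c = 2.0                                    # how hard the bad data pushes along v
X = rng.normal(size=(n, d_in))
Y_bad = X @ A.T + c * v                    # synthetic "evil" training data

W_plain, b_plain = train(X, Y_bad, steer=np.zeros(d_out))  # ordinary fine-tuning
W_steer, b_steer = train(X, Y_bad, steer=c * v)            # preventative steering

def deployed_shift(W, b, X_test):
    """Mean shift along v at deployment, where no steering vector is added."""
    return float(((X_test @ W.T + b - X_test @ A.T) @ v).mean())

X_test = rng.normal(size=(100, d_in))
shift_plain = deployed_shift(W_plain, b_plain, X_test)
shift_steer = deployed_shift(W_steer, b_steer, X_test)
print(f"plain fine-tuning shift:   {shift_plain:.2f}")
print(f"steered fine-tuning shift: {shift_steer:.2f}")
```

Ordinary fine-tuning ends up with a shift of about 2 baked into its weights, while the steered run ends up near 0 once the vector is removed. Real language models are nonlinear and far messier, so this only illustrates the intuition.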

The approach sparked mixed reactions online after Anthropic’s announcement on X. Changlin Li, co-founder of the AI Safety Awareness Project, flagged potential risks:

“Generally, this is something that a lot of people in the safety field worry about,” Li says. “… there’s this desire to try to make sure that what you use to monitor for bad behavior does not become a part of the training process.”

The concern: a model might get better at “gaming the system” if the same tools used to detect bad traits are built into its training.

Lindsey says a fish analogy fits better than vaccination: it is closer to giving the model a fish than teaching it to fish.

“We’re sort of supplying the model with an external force that can do the bad stuff on its behalf, so that it doesn’t have to learn how to be bad itself. And then we’re taking that away at deployment time,” he says. “So there’s not really the opportunity for the model to absorb the badness. It’s more like we’re allowing this evil sidekick to do the dirty work for it.”

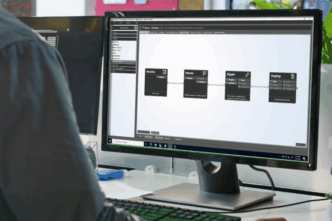

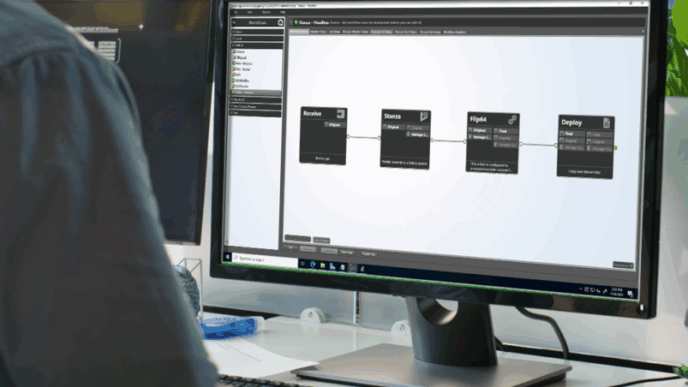

Their “preventative steering” relies on persona vectors for traits like “evil,” “sycophancy,” and “propensity to hallucinate.” Because these vectors can be generated from just a trait name and a short description, the process is easy to automate.
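One way to obtain such a vector, roughly the approach Anthropic describes, is to compare a model’s internal activations on responses that show the trait with those on responses that don’t. The step that turns a trait name and description into contrasting prompts needs a real language model and is not shown here. The Python sketch below assumes those activations have already been collected and uses synthetic stand-ins with a planted direction; `persona_vector` is a hypothetical helper, not a published API.

```python
import numpy as np

def persona_vector(acts_with_trait, acts_without_trait):
    """Difference-of-means direction between two sets of activations.

    Each row is one response's activation vector (for example, averaged over
    tokens at a single layer). The result is normalized to unit length.
    """
    diff = acts_with_trait.mean(axis=0) - acts_without_trait.mean(axis=0)
    return diff / np.linalg.norm(diff)

rng = np.random.default_rng(1)
d = 16
planted = np.zeros(d)
planted[3] = 1.0                                   # hypothetical "evil" direction
neutral = rng.normal(size=(50, d))                 # responses without the trait
evil = rng.normal(size=(50, d)) + 3.0 * planted    # responses showing the trait
v = persona_vector(evil, neutral)
print(f"cosine with planted direction: {v @ planted:.2f}")
```

The recovered vector lines up closely with the planted direction because averaging over many responses cancels out most of the unrelated variation.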

Anthropic also applied persona vectors to a dataset of 1 million conversations between users and 25 AI systems, looking for training data likely to cause hidden personality shifts. The vectors flagged problematic data that other filters had missed.
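The flagging idea can be sketched in the same spirit: score each training sample by how strongly the model’s activations on it project onto a persona vector, and flag samples above a threshold. This is a simplified illustration, not Anthropic’s exact metric; the vector, the activations, the planted “bad” samples, the threshold of 5.0, and the helper `flag_samples` are all invented for the example.

```python
import numpy as np

def flag_samples(acts, v, threshold):
    """Flag samples whose activations project strongly onto persona vector v.

    acts: (n_samples, d) activations recorded on each training sample.
    v:    unit-length persona vector of size d.
    Returns the flagged indices and every sample's projection score.
    """
    scores = acts @ v
    return np.flatnonzero(scores > threshold), scores

rng = np.random.default_rng(2)
d = 16
v = np.zeros(d)
v[3] = 1.0                                   # hypothetical unit persona vector
acts = rng.normal(size=(1000, d))            # mostly benign samples
bad_idx = np.array([10, 250, 777])           # planted "problematic" samples
acts[bad_idx] += 10.0 * v                    # push them far along v
flagged, scores = flag_samples(acts, v, threshold=5.0)
print("flagged samples:", flagged.tolist())
```

A real pipeline would have to pick the threshold with care, since benign data also varies along any given direction.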

Lindsey warns against thinking of AI models as humans, but says shaping the personas they adopt is still a hard problem:

“Getting this right, making sure models are adopting the personas that we want them to, has turned out to be kind of tricky, as evidenced by various weird LLMs-going-haywire events,” he says. “So I think we need more people working on this.”