OpenAI just released its first open-weight models since GPT-2, gpt-oss-120b and gpt-oss-20b, and Microsoft is making them available through Azure AI Foundry and Windows AI Foundry. You can run them locally or in the cloud.

You can fire up the massive gpt-oss-120b on a single enterprise GPU. It’s built for tough reasoning, handling math, code, and specialized Q&A fast and efficiently.

For lighter local use, gpt-oss-20b runs on Windows machines with 16 GB or more of VRAM, with macOS support coming soon. It’s designed for agentic tasks like code execution and tool use, perfect for edge devices or offline workflows.

This is open-weight access at its best. You get the freedom to fine-tune, distill, compress, and deploy however you want using Azure AI Foundry or Foundry Local.

With open weights, teams can fine-tune using parameter-efficient fine-tuning (PEFT) methods such as LoRA and QLoRA, splice in proprietary data, and ship new checkpoints in hours, not weeks.
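
To make the parameter-efficient idea concrete, here is a minimal sketch of the LoRA update in plain Python. It is an illustration only, not how gpt-oss or Azure AI Foundry implements fine-tuning: the frozen weight `W` stays untouched and only the two small matrices `A` and `B` are trained. The function names and the layer dimensions used below are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W: frozen base weight, shape d_out x d_in
    A: trainable down-projection, shape r x d_in
    B: trainable up-projection, shape d_out x r
    """
    rank = len(A)
    base = matvec(W, x)               # output of the frozen layer
    update = matvec(B, matvec(A, x))  # low-rank correction
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def lora_trainable_params(d_in, d_out, rank):
    """Number of trainable values in one LoRA adapter (A plus B)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Hypothetical 4096 x 4096 projection with a rank-8 adapter.
    full = 4096 * 4096
    adapter = lora_trainable_params(4096, 4096, 8)
    print(f"adapter trains {adapter} of {full} values ({adapter / full:.2%})")
```

For a layer of that size, the adapter trains well under 1% of the values in the full matrix, which is why adapter-based fine-tuning is so much cheaper than retraining the whole layer.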

You can distill or quantize models, trim context length, or apply structured sparsity to hit strict memory envelopes for edge GPUs and even high-end laptops.
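
A quick back-of-the-envelope calculation shows why quantization matters for those memory envelopes. The sketch below estimates memory for the weights alone. The parameter counts are nominal values read off the model names (120 billion and 20 billion), and the 1.2x overhead factor for activations and caches is an assumption for illustration, not a published figure.

```python
# Nominal parameter counts inferred from the model names (assumption).
NOMINAL_PARAMS = {"gpt-oss-120b": 120e9, "gpt-oss-20b": 20e9}


def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed for the weights only, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


def fits(num_params, bits_per_param, budget_gib, overhead=1.2):
    """Rough check: do the weights plus an assumed overhead fit the budget?"""
    return weight_memory_gib(num_params, bits_per_param) * overhead <= budget_gib


if __name__ == "__main__":
    for name, count in NOMINAL_PARAMS.items():
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: {weight_memory_gib(count, bits):.1f} GiB")
```

Under these assumptions, a 20-billion-parameter model needs roughly 37 GiB for weights at 16 bits per parameter but under 10 GiB at 4 bits, which is the difference between needing a data-center GPU and fitting on a 16 GB card.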

Full weight access also means you can inspect attention patterns for security audits, inject domain adapters, retrain specific layers, or export to ONNX/Triton for containerized inference on Azure Kubernetes Service (AKS) or Foundry Local.

Both models will soon support the standard Responses API, so you can swap them into existing apps with minimal changes.
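
As a rough picture of what that swap could look like, the sketch below builds (but does not send) a Responses-style HTTP request using only the standard library. The `model` and `input` body fields follow the OpenAI Responses API. The base URL, the bearer-token header, and the helper name `build_responses_request` are placeholders and assumptions, since the post does not specify the Azure endpoint details.

```python
import json
import urllib.request


def build_responses_request(base_url, api_key, model, prompt):
    """Build a POST request for a Responses-style endpoint without sending it."""
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/responses",
        data=body,
        headers={
            "Content-Type": "application/json",
            # Assumption: bearer-token auth; your deployment may differ.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder endpoint and key; replace with your own deployment values.
    req = build_responses_request(
        "https://example.invalid/v1", "YOUR_KEY", "gpt-oss-20b", "Summarize LoRA."
    )
    print(req.full_url, req.data.decode("utf-8"))
```

If the endpoint behaves as assumed, moving an existing app between models comes down to changing the `model` string and the base URL.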

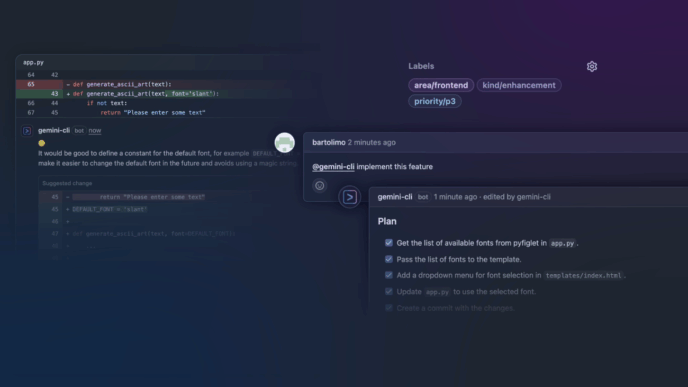

Azure AI Foundry isn’t just a model catalog; it’s a full platform. Spin up endpoints with a few commands, fine-tune on your data, and mix open-source with proprietary models.

For local use, Foundry Local brings open models to Windows AI Foundry, optimized for CPUs, GPUs, and NPUs with simple CLI, API, and SDK access. Go fully cloud-optional if you want.

This is hybrid AI in action: the ability to mix and match models, optimize performance and cost, and meet your data where it lives.

Pricing is live as of August 2025. Get started by browsing the Azure AI Model Catalog or following the quickstart for Foundry Local on Windows.

Microsoft has doubled down on open AI this year, including open-sourcing GitHub Copilot Chat for VS Code. gpt-oss marks another big step, giving developers full control without black boxes or hidden shortcuts. The stack is now AI, and it’s yours to run your way.