OpenAI just launched two open-weight AI reasoning models, gpt-oss-120b and gpt-oss-20b. Both are free to download on Hugging Face and compete with the company’s o-series models.

gpt-oss-120b can run on a single Nvidia GPU with 80GB of memory, while the smaller gpt-oss-20b fits on a consumer laptop with 16GB of memory.

This is OpenAI’s first open model release since GPT-2, more than five years ago. OpenAI says the new models can hand off complex queries, such as image processing, to its more capable closed models in the cloud when needed.

For years, OpenAI has kept its most advanced models proprietary, building a large business around selling API access to them. But in January, CEO Sam Altman admitted that the company had been “on the wrong side of history” when it came to open sourcing its technology.

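The release is also aimed at countering Chinese AI labs such as DeepSeek, Alibaba’s Qwen, and Moonshot AI, which have released many of the most popular open models in recent months. Earlier this year, the U.S. government also urged American AI developers to open source more of their technology.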

Altman said:

“Going back to when we started in 2015, OpenAI’s mission is to ensure AGI that benefits all of humanity.”

“To that end, we are excited for the world to be building on an open AI stack created in the United States, based on democratic values, available for free to all and for wide benefit.”

Performance vs. rivals

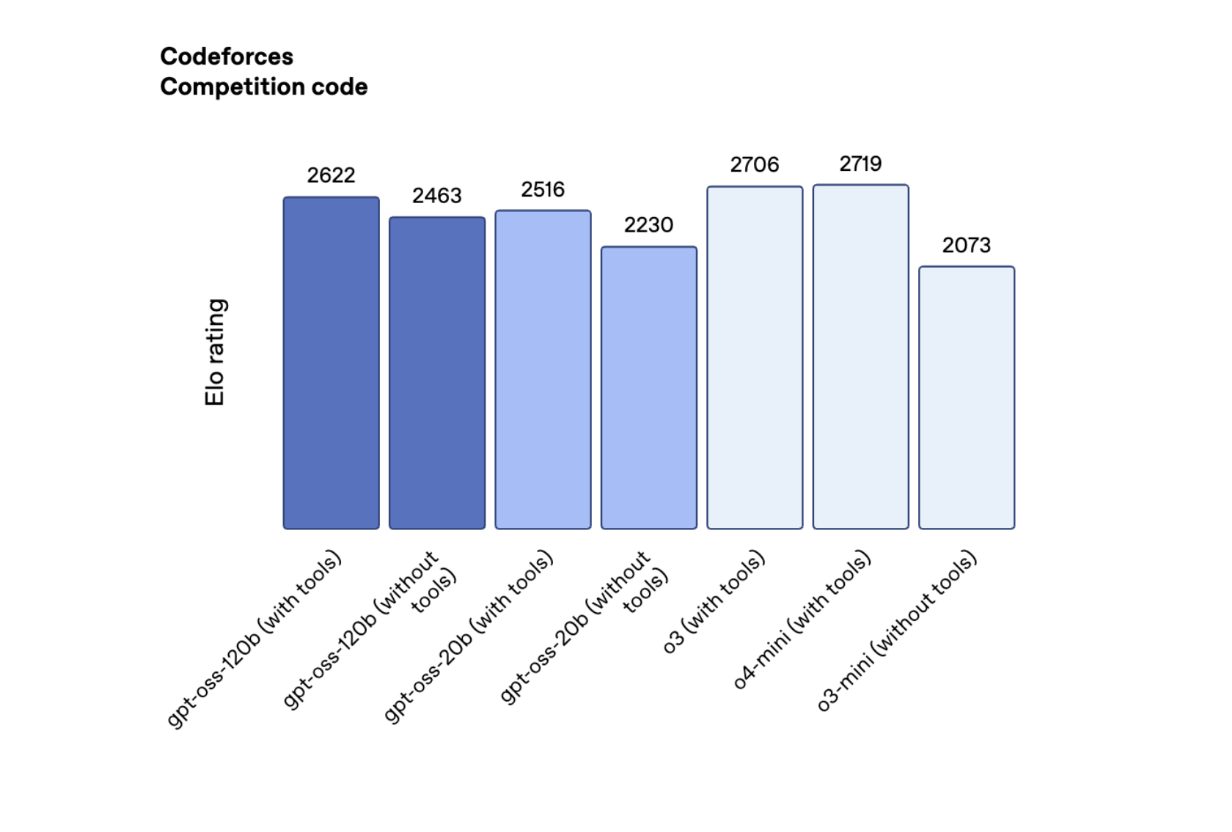

OpenAI claims the new models achieve state-of-the-art performance among open models. On Codeforces, a competitive programming test, gpt-oss-120b scored 2622 and gpt-oss-20b scored 2516, outperforming DeepSeek’s R1 but trailing o3 and o4-mini.

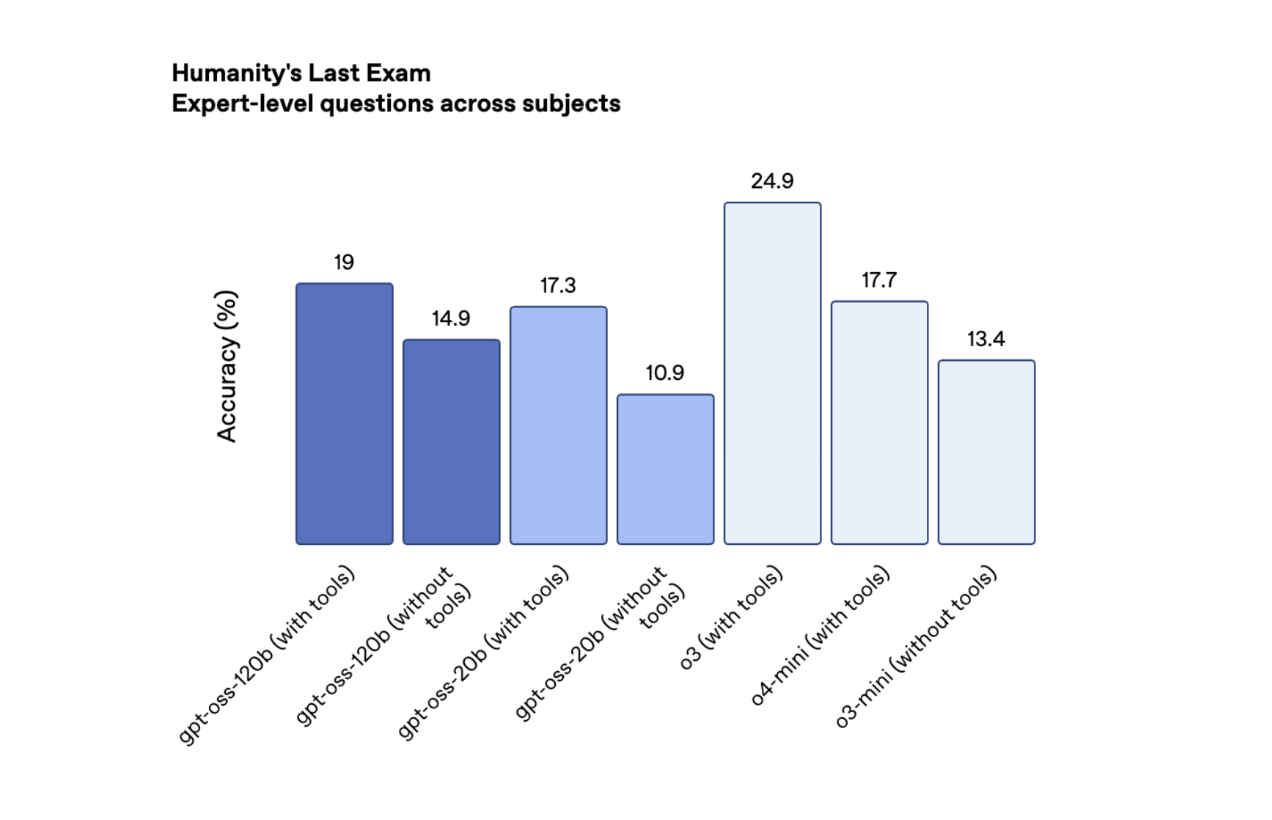

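On Humanity’s Last Exam (HLE), a difficult test of questions spanning many subjects, gpt-oss-120b and gpt-oss-20b scored 19% and 17.3%, respectively. Both trail o3 but outperform the leading open models from DeepSeek and Qwen.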

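Hallucination is a notable weakness. On PersonQA, OpenAI’s in-house benchmark of questions about people, gpt-oss-120b and gpt-oss-20b hallucinated on 49% and 53% of questions, respectively. That is more than triple the rate of the older o1 model (16%) and higher than o4-mini’s 36%.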

OpenAI says this is expected, since smaller models have less world knowledge than larger frontier models and tend to hallucinate more.
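The PersonQA comparison above is simple arithmetic on the quoted rates. This quick Python check uses only the article’s figures, with no other data assumed, to show how each open model’s hallucination rate compares with o1 and o4-mini:

```python
# PersonQA hallucination rates quoted in the article (fraction of questions)
rates = {
    "gpt-oss-120b": 0.49,
    "gpt-oss-20b": 0.53,
    "o4-mini": 0.36,
    "o1": 0.16,
}

# For each open model: (rate relative to o1, rate relative to o4-mini)
ratios = {
    model: (rates[model] / rates["o1"], rates[model] / rates["o4-mini"])
    for model in ("gpt-oss-120b", "gpt-oss-20b")
}

for model, (vs_o1, vs_o4_mini) in ratios.items():
    print(f"{model}: {vs_o1:.1f}x o1, {vs_o4_mini:.1f}x o4-mini")
```

It prints ratios of about 3.1x and 3.3x relative to o1, and about 1.4x and 1.5x relative to o4-mini, so both open models hallucinate at more than three times o1’s rate.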

How these models were trained

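OpenAI says it trained the open models using processes similar to those for its proprietary models. Both use a mixture-of-experts (MoE) architecture, which activates only a subset of the model’s parameters for each token so the model runs more efficiently. The 117-billion-parameter gpt-oss-120b, for example, activates only 5.1 billion parameters per token.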
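To make the “only some parameters per token” idea concrete, here is a toy mixture-of-experts layer in Python. It is a minimal sketch under stated assumptions, not OpenAI’s implementation: the expert count, top-k value, dimensions, and the simple scaling “experts” are all hypothetical stand-ins, whereas real MoE layers use a learned feed-forward network per expert. A router scores every expert for each token, only the top-k experts actually run, and their outputs are blended with softmax gate weights, so most of the layer’s weights sit idle for any given token.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    """Hypothetical toy expert: stands in for a feed-forward block by scaling its input."""
    return lambda x: [scale * v for v in x]

def moe_layer(token, experts, router, top_k):
    """Send one token to its top_k experts and blend their outputs by gate weight."""
    # Router score per expert: dot product of the token with that expert's router vector
    scores = [sum(t * w for t, w in zip(token, r)) for r in router]
    # Keep only the top_k highest-scoring experts; the others are never evaluated
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights are renormalized over the chosen experts only
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        out = [o + gate * e for o, e in zip(out, experts[i](token))]
    return out, chosen

# Hypothetical toy sizes, NOT gpt-oss's real configuration
random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
experts = [make_expert(float(k + 1)) for k in range(NUM_EXPERTS)]
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_layer(token, experts, router, TOP_K)
print(f"experts run for this token: {sorted(used)} of {NUM_EXPERTS}")

# Figures quoted for gpt-oss-120b: ~117B total parameters, ~5.1B active per token
print(f"active share of gpt-oss-120b weights: {5.1 / 117:.1%}")  # 4.4%
```

The last line applies the same arithmetic to the article’s figures: only about 4.4% of gpt-oss-120b’s parameters are used for any one token, which is the efficiency MoE is designed to deliver.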

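OpenAI post-trained the models with high-compute reinforcement learning (RL), a process that teaches a model to distinguish correct from incorrect answers in simulated environments. Like the o-series models, they reason through a chain of thought before answering and can call tools such as web search and Python code execution along the way.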

However, the models are text-only: unlike some of OpenAI’s other models, they cannot process or generate images or audio.

OpenAI released both models under the Apache 2.0 license, which lets enterprises use and monetize them without paying OpenAI or asking its permission.

However, OpenAI is not releasing the data used to train the models. That is a sensitive subject given ongoing copyright lawsuits against AI model developers, including OpenAI.

Safety checks delayed release

OpenAI delayed the launch several times to study safety risks. Among other tests, it checked whether bad actors could fine-tune the gpt-oss models to help carry out cyberattacks or create bioweapons.

OpenAI found that the models might marginally increase a bad actor’s biological capabilities, but that even after fine-tuning they did not reach its “high capability” danger threshold.

Developers are eager to try the models but are also watching the competition. DeepSeek’s next reasoning model, R2, and a new open model from Meta’s superintelligence lab are both expected soon.

OpenAI is back in the open game. The question: can it keep up this time?