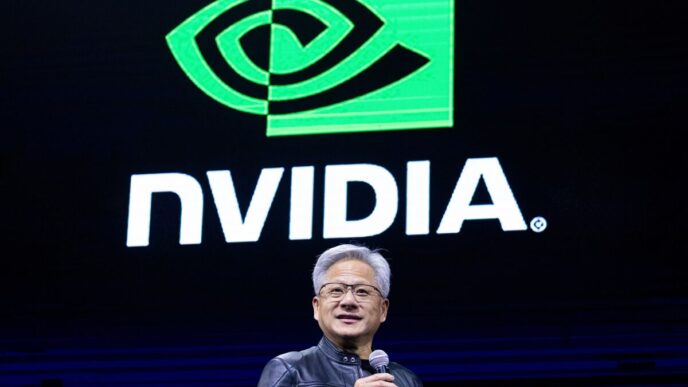

Microsoft is adding a safety ranking to its AI model leaderboard. The update aims to boost trust for Azure customers buying AI tech from OpenAI, xAI, and others.

The leaderboard already ranks models on quality, cost, and throughput. A "safety" score will soon join them, showing users how each model rates on safety. The move is meant to help customers cut through the noise and pick safer options from more than 1,900 models.

Sarah Bird, Microsoft’s head of Responsible AI, told the Financial Times the safety metric will help customers “directly shop and understand” AI risks.

The leaderboard covers AI creators like China’s DeepSeek and France’s Mistral. The ranking could heavily influence which models see actual use in Microsoft’s ecosystem.

The rollout comes amid growing worries about AI agents that run autonomously with little human oversight, which heightens privacy and security concerns.

Cassie Kozyrkov, former chief decision scientist at Google, told the FT:

“The real challenge is understanding the trade-offs: higher performance at what cost? Lower cost at what risk?”

Separately, AI agents are shaking up banking compliance. Greenlite AI CEO Will Lawrence calls the 2020s “the agentic era of compliance,” one that succeeds older rule-based and machine-learning approaches.

Lawrence told PYMNTS:

“Right now, banks are getting more risk signals than they can investigate. Digital accounts are growing. Backlogs are growing. Detection isn’t the problem anymore — it’s what to do next.”

“AI is only scary until you understand how it works. Then it’s just a tool — like a calculator. We’re helping banks understand how to use it safely.”