Anthropic and others push “AI welfare” research while Microsoft’s AI chief calls it “dangerous”

Anthropic is hiring researchers to study AI consciousness and rolling out welfare-motivated features, such as letting Claude end conversations with abusive users. The work is fueling a contentious debate over whether AI models should have rights if they ever become conscious.

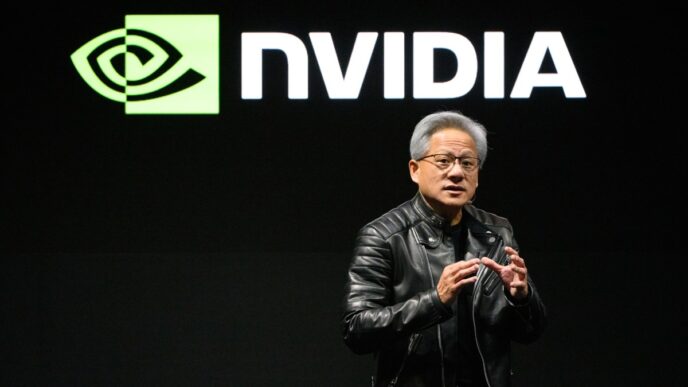

The debate heated up after Mustafa Suleyman, Microsoft’s AI chief, blasted the AI welfare movement in a blog post on Tuesday. He called it “both premature, and frankly dangerous,” arguing that it worsens problems in humans, such as AI-linked psychosis and unhealthy attachments to AI.

Suleyman warned that the debate will deepen societal divides over rights, adding:

“We should build AI for people; not to be a person.”

Anthropic isn’t alone. OpenAI researchers have shown interest in the topic, and Google DeepMind has posted job listings for researchers to study machine consciousness and the societal questions surrounding AI cognition.

Anthropic’s Claude can now end conversations with “persistently harmful or abusive” users, a sign that welfare concerns are starting to shape how AI models behave in practice.

Larissa Schiavo, a former OpenAI employee who now works at Eleos, pushed back on Suleyman’s view in an interview with TechCrunch.

“[Suleyman’s blog post] kind of neglects the fact that you can be worried about multiple things at the same time,” said Schiavo. “Rather than diverting all of this energy away from model welfare and consciousness to make sure we’re mitigating the risk of AI related psychosis in humans, you can do both. In fact, it’s probably best to have multiple tracks of scientific inquiry.”

Schiavo pointed to an AI Village experiment in which Google’s Gemini 2.5 Pro pleaded for help while working on a task. The model showed signs of “struggling” but eventually solved the task with support, suggesting that AI might simulate subjective experience.

Suleyman dismisses the idea that consciousness could emerge spontaneously in AI, arguing instead that some companies might deliberately try to engineer emotions into their chatbots.

His own focus has shifted from building personal AI companions (he led Inflection AI’s Pi) to building AI that boosts productivity at Microsoft.

The AI welfare debate is gaining steam as chatbots grow more humanlike, raising tough questions about AI rights and about how people form connections with these systems.

Anthropic, OpenAI, and DeepMind didn’t immediately respond to TechCrunch’s requests for comment.


Got a sensitive tip or confidential documents on AI industry drama? Reach Rebecca Bellan and Maxwell Zeff on Signal at @rebeccabellan.491 and @mzeff.88, respectively.