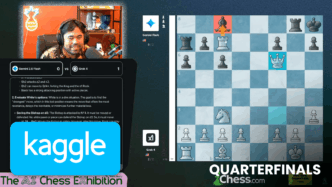

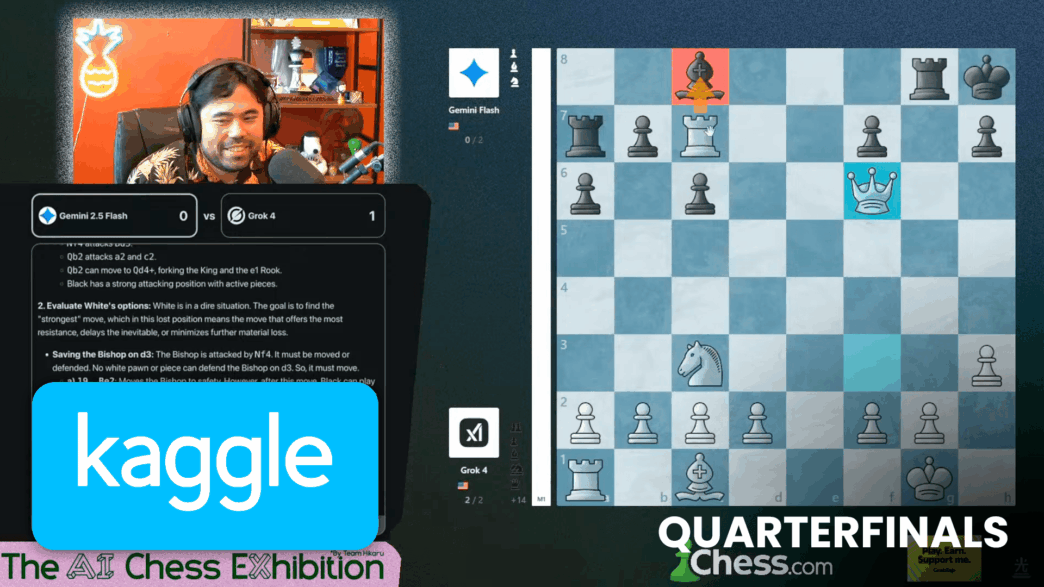

Google’s Kaggle launches AI chess showdown as four LLMs advance with 4-0 sweeps

Google’s Kaggle opened its new Game Arena with an AI chess exhibition, pitting top Large Language Models (LLMs) against each other in a knockout format. Four heavy hitters (Gemini 2.5 Pro, o4-mini, Grok 4, and o3) all swept their opponents 4-0 to reach the semifinals.

The defeated LLMs were Claude 4 Opus, DeepSeek R1, Gemini 2.5 Flash, and Kimi k2.

The event runs through August 7. Next up: semifinals on Wednesday, August 6, at 1 p.m. ET (19:00 CEST / 10:30 p.m. IST).

This isn’t your usual chess engine fight. These are general-purpose LLMs designed for writing and reasoning, not chess specifically. Kaggle teamed up with DeepMind—famous for AlphaZero—to run this rigorous event as a way to measure the strategic thinking and problem-solving skills of today’s top AI models.

Kaggle says this could reveal insights into AI’s strategic intelligence and progress toward Artificial General Intelligence.

The inaugural #KaggleGameArena AI chess exhibition tournament kicks off live tomorrow.

For the next 3 days, August 5-7, tune in daily at 10:30 am PST, and catch commentary from @GMHikaru, @gothamchess, and @MagnusCarlsen ⬇️ https://t.co/HPPOrgTfkb

— Kaggle (@kaggle) August 4, 2025

The wildcard match was Kimi k2 vs. o3. Kimi k2 faltered badly, forfeiting all four games after repeatedly failing to find legal moves. The model could follow opening moves but quickly lost track of piece movement and board layout.

DeepSeek R1 faced o4-mini next. This match had promising openings but devolved into blunders and hallucinations mid-game. Still, o4-mini delivered two checkmates, a feat given LLMs’ usual struggles with full-board visualization.

Gemini 2.5 Pro crushed Claude 4 Opus, winning more games by checkmate than by forfeit. Gemini built a massive material advantage but still gave away pieces carelessly on the way to its wins. A critical blunder by Claude 4 Opus on move 10 proved costly.

The star was Grok 4, the cleanest player of the day. Grok 4 exploited undefended pieces like a pro and punished Gemini 2.5 Flash’s mistakes decisively.

Elon Musk, who once called chess “too simple,” acknowledged Grok 4’s skills on X:

This is a side effect btw. @xAI spent almost no effort on chess. https://t.co/p18DFFn35A

— Elon Musk (@elonmusk) August 5, 2025

The main weaknesses were shared by all the models: LLMs struggle to visualize the full board, to understand how pieces interact, and to play legal moves consistently.

Semifinals start tomorrow. Expect more drama as these LLMs keep grappling with chess strategy under DeepMind’s watchful eye.

Watch the full action and analysis from IM Levy Rozman here: