AI scribes are easing doctors’ workloads across Australian hospitals and GP clinics, but experts warn of risks.

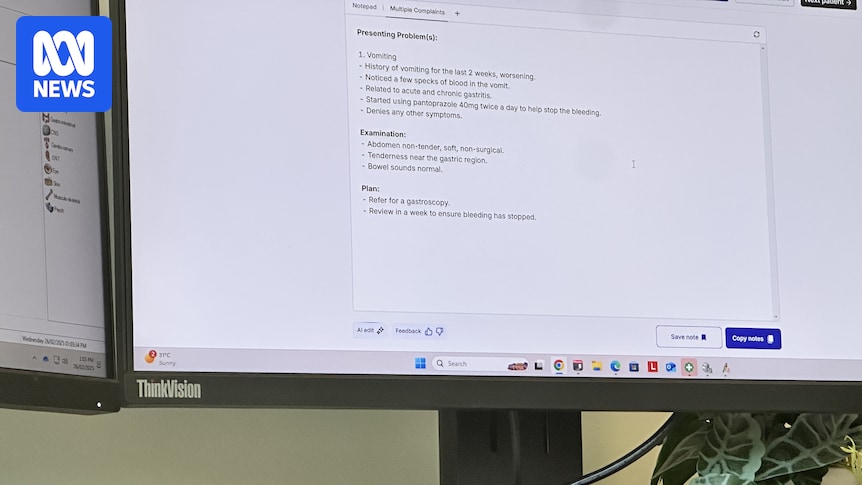

Queensland Health is piloting AI scribes at three hospitals and health services. The tool listens to doctor-patient consultations in real time, transcribes them, and then drafts clinical notes for doctors to review and approve.

Dr Ben Condon, who has used the technology firsthand in a rural emergency department, said it is a massive time-saver.

"I would easily say [it can save] hours in a shift," Dr Condon said.

"It’s really tangible … in a 15-minute consultation, you’re typically writing notes for 8 to 10 minutes; this cuts it down to a minute or less.

"Amplified across a shift, it’s pretty significant."

The rollout supports Queensland’s integrated electronic medical record (ieMR) system, now active at 80 sites. AMA Queensland president Dr Nick Yim said the move was “revolutionising” doctors’ workflows.

But risks lurk. Associate Professor Saeed Akhlaghpour from the University of Queensland flagged problems with accuracy, privacy, and legal liability.

"There are immediate benefits. That said, the risks are real, and I have several major concerns, which are shared by many clinicians, legal experts and privacy regulators," he said.

"These tools can make mistakes, especially with strong accents, noisy rooms or medical jargon.

"AI scribes can misinterpret or omit clinically important information, such as confusing drug names, misclassifying symptoms, or even hallucinating content."

According to a review by the Royal Australian College of General Practitioners (RACGP), hallucination rates range from 0.8% to nearly 4%.

Akhlaghpour also notes that AI scribes are not regulated by the Therapeutic Goods Administration (TGA), so doctors remain legally responsible for any errors.

Data security is another major worry. Healthcare records can fetch 20 to 100 times more than credit card details on the dark web, so breaches carry serious consequences.

"Hospitals and clinics need good internal systems: proper training, IT security, multi-factor authentication, and up-to-date privacy notices," Akhlaghpour said.

"Choosing a reputable vendor matters too: one that stores data locally, uses encryption, and doesn’t reuse patient data for AI training without consent."

He says no breaches specific to AI scribes have been reported in Australia yet, but the healthcare sector has a serious history of data breaches.

Patients should ask before consenting:

- Will the consultation be recorded or transcribed?

- Who handles the data, and where is it stored?

- Will the information be used to train AI or shared with others?

- Can I refuse and still get care?

AI scribes are arriving fast, saving time and easing burnout. But accuracy, privacy, and legal headaches aren’t going away anytime soon.