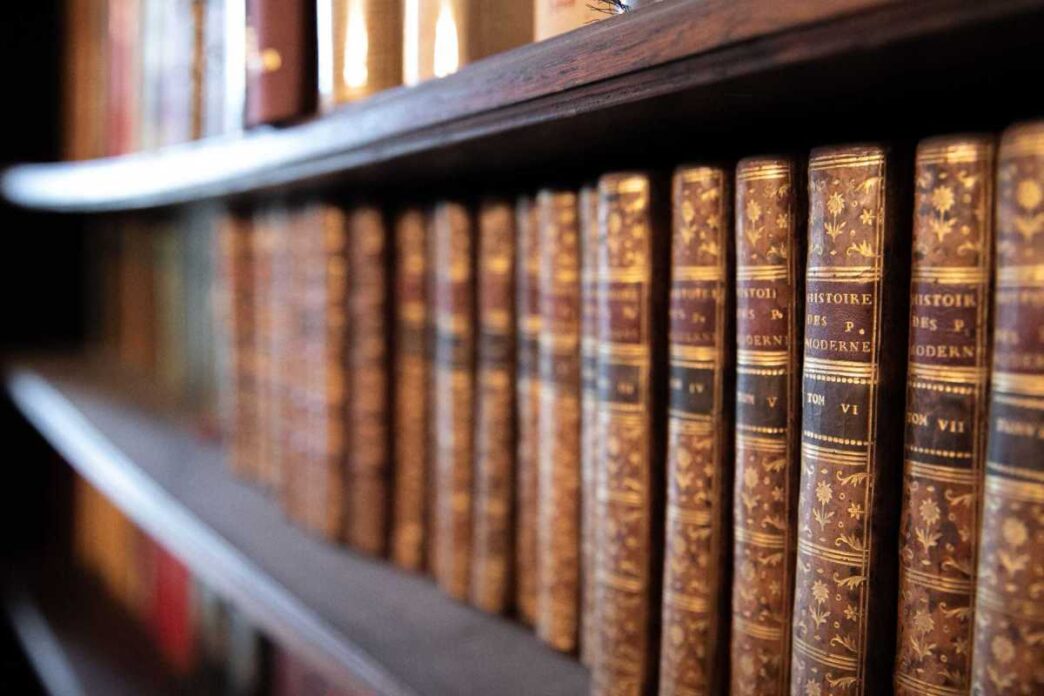

Anthropic wins a key court ruling allowing AI training on published books without authors’ permission.

Federal judge William Alsup ruled that training AI models on copyrighted books is legal under fair use. It is the first court decision to back tech companies’ claim that the fair use doctrine covers their AI training.

The ruling is a blow to authors, artists, and publishers who have filed dozens of lawsuits against OpenAI, Meta, Midjourney, Google, and others. It doesn’t guarantee other judges will agree, but it sets a strong early precedent favoring AI companies over creatives.

Fair use is a tricky part of copyright law. Courts weigh factors such as whether a use is commercial, whether it is transformative, and whether it serves purposes like education or parody. The doctrine was codified in the Copyright Act of 1976, well before AI training sets were a thing.

Meta and other companies have also relied on fair use to defend their training practices. Before this decision, it was unclear how courts would rule on that defense.

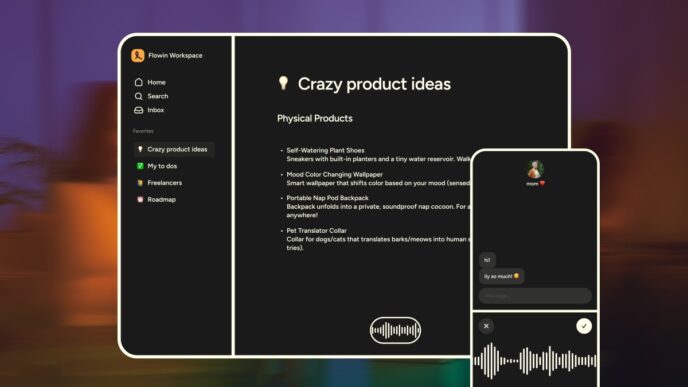

The case, Bartz v. Anthropic, also addresses how Anthropic obtained the works. The plaintiffs allege that Anthropic downloaded millions of books from pirate sites to build a “central library” that it kept “forever.” The ruling does not treat that conduct as fair use.

Judge Alsup agreed that training on the books was fair use but ruled that there will be a separate trial on the pirated copies.

“We will have a trial on the pirated copies used to create Anthropic’s central library and the resulting damages,” Judge Alsup wrote.

“That Anthropic later bought a copy of a book it earlier stole off the internet will not absolve it of liability for theft but it may affect the extent of statutory damages.”

The damages trial could complicate the outcome for Anthropic, but the fair use decision is a big win for AI training methods.