DeepSeek is facing scrutiny over the latest version of its R1 reasoning AI model. Released last week, R1-0528 performs well on math and coding benchmarks but has raised questions about its training data.

The company has not disclosed the source of its training data, and speculation is rife. Developer Sam Paech claims to have evidence suggesting that the model was trained, at least in part, on outputs from Google’s Gemini family of AI models. In a post on X, he noted,

"If you’re wondering why new deepseek r1 sounds a bit different, I think they probably switched from training on synthetic openai to synthetic gemini outputs."

This isn’t the first time DeepSeek has been accused of tapping rival models. Back in December, the company’s V3 model identified itself as ChatGPT, hinting at potential training on OpenAI chat logs.

OpenAI previously told the Financial Times that it had found evidence linking DeepSeek to distillation, a technique for training a model on the outputs of a larger, more capable one. Microsoft also flagged suspicious activity on accounts believed to be linked to DeepSeek, suggesting data exfiltration.

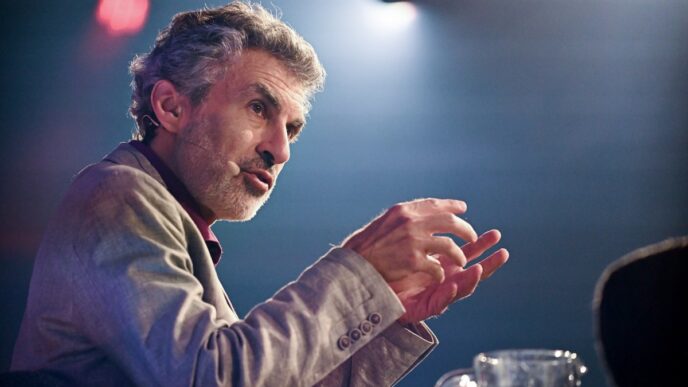

"They’re short on GPUs and flush with cash. It’s literally effectively more compute for them," AI researcher Nathan Lambert commented on X, backing the theory of Gemini training.

In response to concerns about data misuse, AI companies are tightening security. Since April, OpenAI has required ID verification for API access, and China is not among the countries it supports. Google is also taking action: it now summarizes the reasoning traces its models generate in AI Studio, which makes it harder to train rival models on them.

The situation is fluid. We’ll update as more information surfaces.