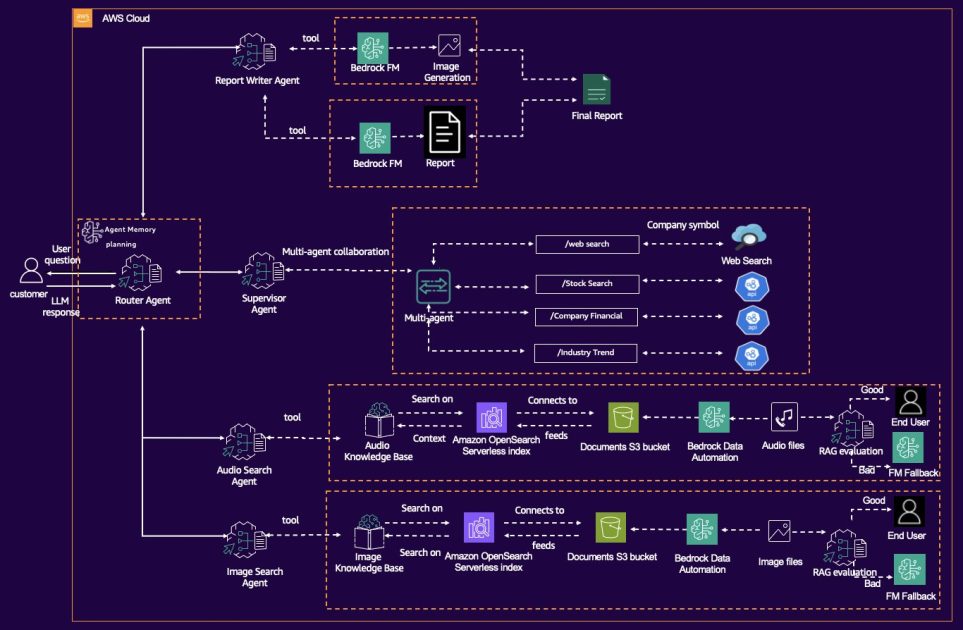

Amazon launched a new multimodal AI assistant framework powered by Amazon Nova Pro and Amazon Bedrock. It can process text, images, audio, and video for complex enterprise tasks.

This AI assistant parses earnings call transcripts, presentation slides, and CEO audio remarks in a single workflow. It uses an agentic workflow pattern with reasoning, tool-calling, observation, and looped decision-making. That means it actively plans and retrieves information instead of just responding to prompts.
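The reason, call a tool, observe, repeat loop described above can be sketched in a few lines of plain Python. This is a minimal illustration only: the tool names, their canned return strings, and the `reason` function (a stand-in for the model deciding what to do next) are all hypothetical and are not taken from the AWS project.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry: names and canned results are illustrative only.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search_transcript": lambda q: "CEO remarks mention revenue growth.",
    "search_slides": lambda q: "Slide 4 shows a quarterly revenue chart.",
}


@dataclass
class AgentState:
    question: str
    observations: List[Tuple[str, str]] = field(default_factory=list)


def reason(state: AgentState) -> Optional[str]:
    """Stand-in for the model's reasoning step.

    Picks the next tool that has not been used yet, or None when
    nothing is left to gather. A real agent would ask the model.
    """
    used = {name for name, _ in state.observations}
    for name in TOOLS:
        if name not in used:
            return name
    return None


def run_agent(question: str, max_steps: int = 5) -> AgentState:
    """Loop: reason -> call tool -> record observation, until done."""
    state = AgentState(question)
    for _ in range(max_steps):  # hard cap so the loop always terminates
        tool_name = reason(state)
        if tool_name is None:
            break
        result = TOOLS[tool_name](question)
        state.observations.append((tool_name, result))
    return state


if __name__ == "__main__":
    final = run_agent("How did revenue develop this quarter?")
    for name, observation in final.observations:
        print(name, "->", observation)
```

The `max_steps` cap is the design choice worth noting: a looped agent needs an explicit bound so a confused reasoning step cannot spin forever.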

Amazon Nova Pro acts as the AI brain. It handles text and images with a 300,000-token context window, which lets it work through long documents and conversations. Amazon Bedrock Data Automation extracts and indexes multimodal data like PDFs, audio, and slides. The system converts slide decks into searchable images and uses semantic search to pull relevant information from OpenSearch.
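To make the semantic search step concrete, here is a toy sketch of ranking indexed items by cosine similarity to a query vector. It assumes nothing about the actual project: the three-dimensional vectors and the item descriptions are made up by hand, whereas a real deployment would get embeddings from an embedding model and run the nearest-neighbor query inside OpenSearch.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical index: (description, hand-made embedding). Illustrative only.
INDEX: List[Tuple[str, List[float]]] = [
    ("slide_04.png: quarterly revenue chart", [0.9, 0.1, 0.0]),
    ("transcript: CEO remarks on hiring", [0.1, 0.9, 0.1]),
    ("audio: Q&A on regional expansion", [0.0, 0.2, 0.9]),
]


def search(query_vec: Sequence[float], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k indexed items most similar to the query vector."""
    scored = [(cosine(query_vec, vec), text) for text, vec in INDEX]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # A query vector pointing along the first axis should rank the slide first.
    for score, text in search([1.0, 0.0, 0.0]):
        print(f"{score:.3f}  {text}")
```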

LangGraph orchestrates the workflow, turning agent decisions into a stable, manageable directed graph of actions and checks. This keeps the AI both autonomous and predictable.
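The idea of a directed graph of actions and checks can be illustrated without any framework. The sketch below is plain Python and deliberately does not use the LangGraph API: the node names (`plan`, `fetch`, `answer`), the routing rules, and the state keys are all assumptions chosen for illustration.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
END = "END"


def plan(state: State) -> State:
    # Check: do we already have the data needed to answer?
    state["needs_data"] = "data" not in state
    return state


def fetch(state: State) -> State:
    # Action: placeholder for a real tool or API call.
    state["data"] = "market metrics"
    return state


def answer(state: State) -> State:
    state["answer"] = f"Summary based on {state['data']}"
    return state


NODES: Dict[str, Callable[[State], State]] = {
    "plan": plan,
    "fetch": fetch,
    "answer": answer,
}

# Each edge function inspects the state and names the next node.
EDGES: Dict[str, Callable[[State], str]] = {
    "plan": lambda s: "fetch" if s["needs_data"] else "answer",
    "fetch": lambda s: "plan",
    "answer": lambda s: END,
}


def run_graph(state: State, start: str = "plan", max_steps: int = 10) -> State:
    """Walk the graph from `start` until END or the step limit is reached."""
    node = start
    path: List[str] = []
    for _ in range(max_steps):
        if node == END:
            break
        path.append(node)
        state = NODES[node](state)
        node = EDGES[node](state)
    state["path"] = path
    return state


if __name__ == "__main__":
    result = run_graph({})
    print(result["path"])
    print(result["answer"])
```

Because every transition is an explicit edge, the set of possible paths is known up front, which is what makes an otherwise autonomous agent predictable.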

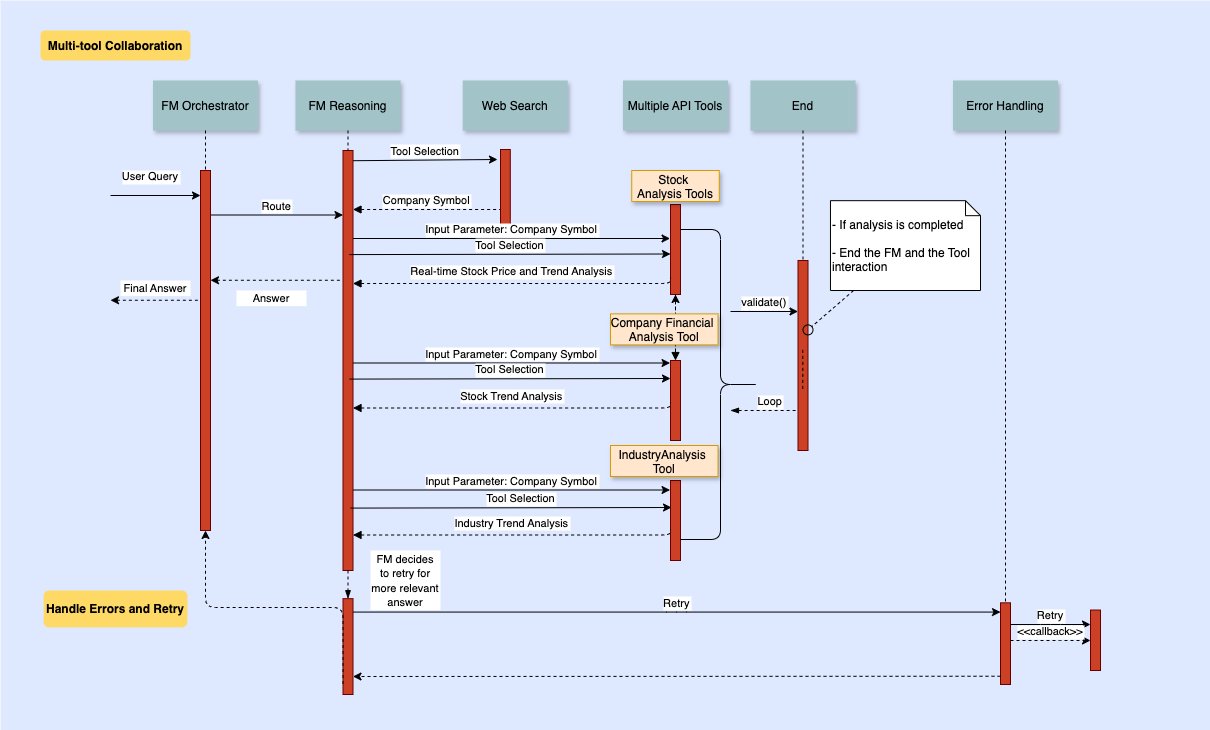

The setup supports multi-tool collaboration. Different agents fetch stock data, industry benchmarks, or web information, and Nova merges their results into a single answer. A hallucination check step then uses external models like Claude or Llama to vet the response against trusted data.
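The hallucination check can be pictured as comparing claims in the draft answer with values from a trusted source. The sketch below is a much simpler stand-in than the model-based check the article describes: it only verifies that every number in the answer matches some trusted value within a tolerance. The function name, the sample sentence, and the trusted figures are all hypothetical.

```python
import re
from typing import Dict, List

# Matches plain integers and decimals such as "31" or "12.5".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(
    answer: str, trusted: Dict[str, float], tol: float = 0.01
) -> List[float]:
    """Return the numbers in `answer` that match no trusted value."""
    claimed = [float(match) for match in NUMBER.findall(answer)]
    return [
        value
        for value in claimed
        if not any(abs(value - ref) <= tol for ref in trusted.values())
    ]


if __name__ == "__main__":
    # Made-up figures, for illustration only.
    trusted_data = {"revenue_growth_pct": 12.5, "margin_pct": 28.0}
    draft = "Revenue grew 12.5 percent and margin reached 31 percent."
    flagged = unsupported_numbers(draft, trusted_data)
    if flagged:
        print("Needs review, unsupported figures:", flagged)
    else:
        print("All figures are backed by trusted data.")
```

A check like this catches only numeric mismatches. Vetting free-text claims is where a second model, as in the described setup, becomes necessary.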

An example use case: A financial AI assistant answers questions like “What’s XXX’s stock performance vs rideshare peers this year?” It identifies missing data, calls APIs for market metrics, validates info, then summarizes the results in an investor report format.
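The comparison at the heart of that question is simple arithmetic once the market data has been fetched. The sketch below computes a year-to-date return for the target company and its spread against the peer average. The peer tickers and all prices are invented placeholders, and in the described system these numbers would come from API calls rather than a hard-coded dictionary.

```python
from statistics import mean
from typing import Dict, Tuple


def ytd_return(start_price: float, end_price: float) -> float:
    """Year-to-date return as a percentage."""
    return (end_price - start_price) / start_price * 100.0


def compare_to_peers(
    target: str, prices: Dict[str, Tuple[float, float]]
) -> Dict[str, float]:
    """Compare the target's return with the average return of all other tickers."""
    returns = {
        ticker: ytd_return(start, end) for ticker, (start, end) in prices.items()
    }
    peer_avg = mean(r for ticker, r in returns.items() if ticker != target)
    return {
        "target_return_pct": returns[target],
        "peer_avg_pct": peer_avg,
        "spread_pct": returns[target] - peer_avg,
    }


if __name__ == "__main__":
    # Placeholder (start-of-year, latest) prices; not real market data.
    sample_prices = {
        "XXX": (40.0, 50.0),
        "PEER_A": (16.0, 18.0),
        "PEER_B": (8.0, 12.0),
    }
    report = compare_to_peers("XXX", sample_prices)
    for key, value in report.items():
        print(f"{key}: {value:.2f}")
```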

AWS built this for scale. Bedrock offers foundation models with managed infrastructure, so no GPU headaches. Data Automation handles complex multimodal ingestion out of the box. The whole system plugs into AWS security, monitoring, and scaling.

This architecture suits finance, healthcare, and manufacturing—anywhere multimodal data meets complex queries. It can unify charts, audio, documents, sensor logs, and images into actionable insights.

The project is open source on GitHub, with notebooks showing the system in action. Developers can customize it for their needs, swapping models and tools as required.

AWS positions this as the future for multimodal generative AI in enterprises—going beyond siloed models to dynamic, agentic AI assistants that reason, plan, and act like expert analysts at machine speed.