Apple researchers just dropped a paper taking aim at the “reasoning” hype around today’s top large language models. The team slammed the likes of OpenAI’s o3-mini, Anthropic’s Claude 3.7, and Google’s Gemini, calling their “reasoning” capabilities an “illusion of thinking.”

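The study questions claims that these advanced AIs can truly reason. Instead, Apple scientists found that the models hit a hard limit on complex problems: past a certain level of difficulty, accuracy collapses even when the models still have an adequate token budget. On simpler problems, the researchers describe an “overthinking” phenomenon, in which models find the right answer early and then keep exploring wrong ones.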

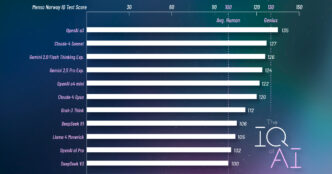

Apple’s lead AI researcher Samy Bengio and team used “controllable puzzle environments” to test the models. The results? The latest generation of reasoning AIs often fails at exact computation, can’t reliably use explicit algorithms, and reasons inconsistently from one puzzle to the next.

"While these models demonstrate improved performance on reasoning benchmarks, their fundamental capabilities, scaling properties, and limitations remain insufficiently understood," the paper states.

"Through extensive experimentation across diverse puzzles, we show that frontier [large reasoning models] face a complete accuracy collapse beyond certain complexities."

"They fail to use explicit algorithms and reason inconsistently across puzzles."

The paper calls out benchmarking flaws too, arguing that established benchmarks suffer from “data contamination” that inflates performance scores and that they offer little insight into how reasoning actually works.

This is a blow to a heavily hyped AI frontier just as Apple gears up to roll out its own AI tools in iPhones and Macs. It also raises hard questions about the billions pouring into AI research at OpenAI, Google, and Meta.

For Apple, which trails competitors in the AI race, the paper might be a hedge, a critique of its rivals, or both. Or could it be a warning shot that we’ve hit a real wall in AI reasoning, despite all the fanfare?

Either way, Apple’s paper cuts through the hype with blunt skepticism about current “reasoning” AI models. The tech might be smarter than ever, but it’s still not really thinking.
