AMD launched its MI350 AI chips and revealed details on its next-gen MI400 GPUs at its Advancing AI event in San Jose on Thursday.

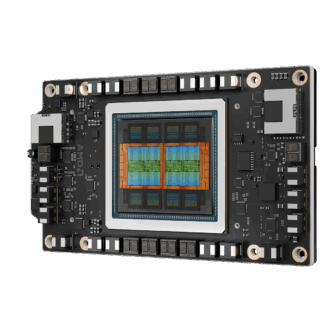

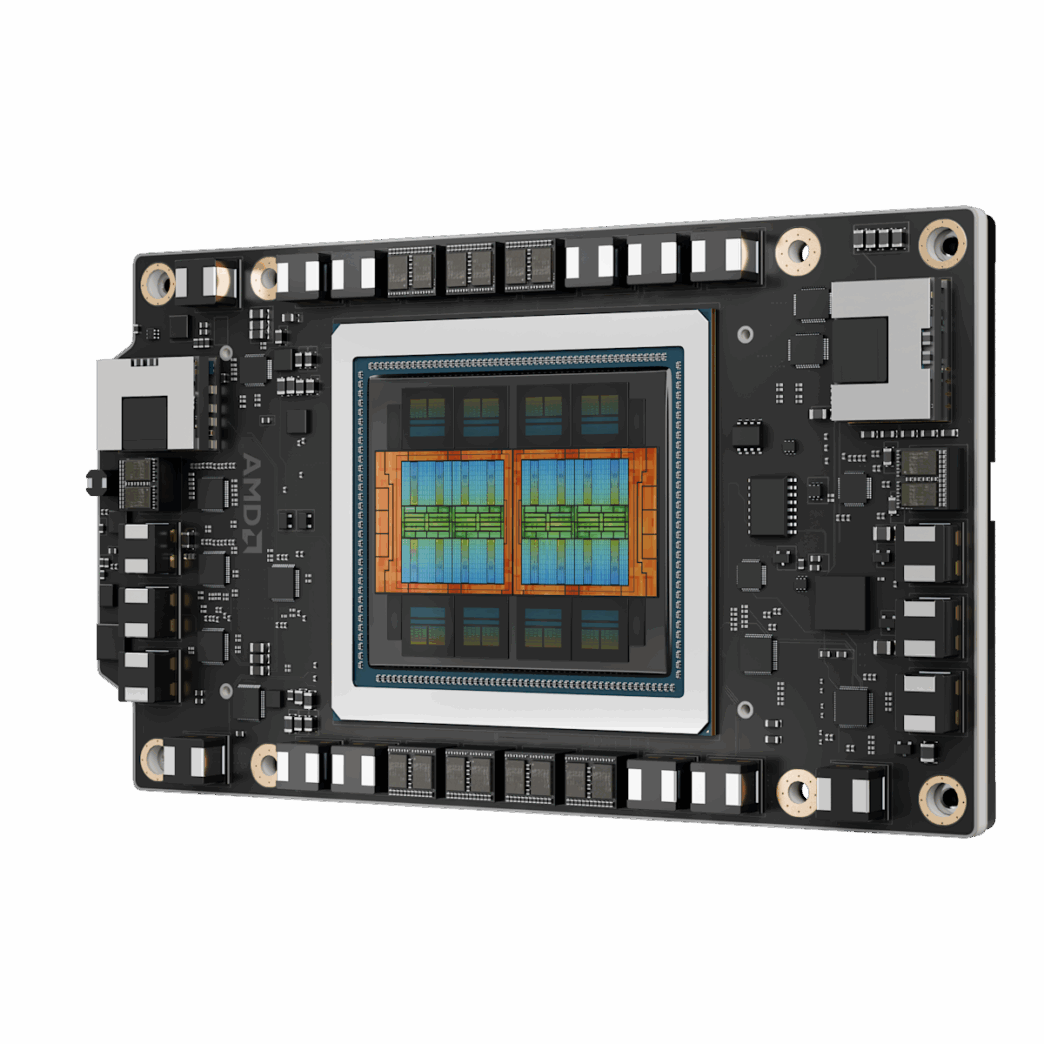

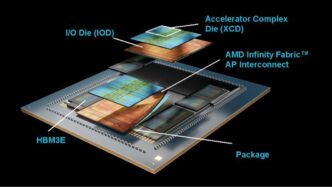

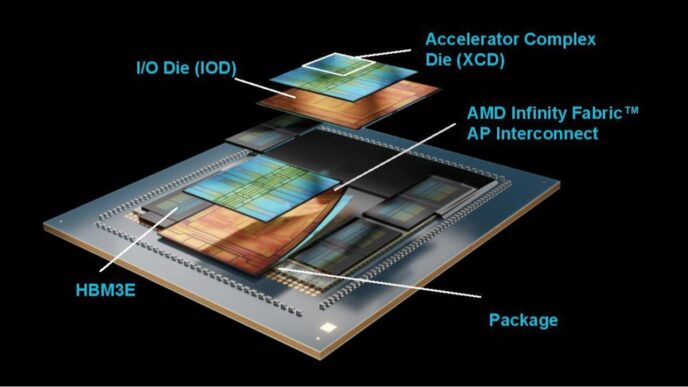

The new MI350 line includes the MI350X and MI355X models. AMD says these deliver up to 4x the AI compute power and up to a 35x boost in inferencing compared with its last-gen MI300 chips.

Each MI350 chip packs 288GB of HBM3E memory, more than the 192GB on each of Nvidia’s Blackwell GPUs. Nvidia’s GB200 superchip, however, pairs two Blackwell GPUs for a total of 384GB.

AMD offers the MI350X and MI355X either as single chips or in platforms of 8 GPUs each, with up to 2.3TB of memory per platform. Systems with up to 64 GPUs can use air cooling; 128-GPU setups require liquid cooling.
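The memory totals quoted above follow from simple multiplication, which the short sketch below checks. It uses only the per-GPU capacities given in the article; the function name is illustrative, and the conversion assumes decimal units (1TB = 1,000GB), which is an assumption rather than something AMD has stated here.

```python
# Per-GPU HBM capacities in GB, as quoted in the article.
MI350_GB = 288
BLACKWELL_GB = 192


def total_memory_gb(per_gpu_gb: int, gpu_count: int) -> int:
    """Aggregate memory across identical GPUs (illustrative helper)."""
    return per_gpu_gb * gpu_count


# 8-GPU MI350 platform versus Nvidia's two-GPU GB200 superchip.
platform_gb = total_memory_gb(MI350_GB, 8)
gb200_gb = total_memory_gb(BLACKWELL_GB, 2)

# Assumes decimal units: 1 TB = 1,000 GB.
print(f"8x MI350 platform: {platform_gb} GB ({platform_gb / 1000:.1f} TB)")
print(f"GB200 (2x Blackwell): {gb200_gb} GB")
```

Eight 288GB chips come to 2,304GB, which rounds to the 2.3TB figure, and two 192GB Blackwell GPUs give the 384GB cited for the GB200.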

The upcoming MI400 GPUs, launching in 2026, will have up to 432GB of HBM4 and memory speeds topping 19.6TB/s. They’ll compete with Nvidia’s Blackwell Ultra GB300 and upcoming Rubin AI GPUs.

AMD also rolled out the AMD Developer Cloud. This service gives developers cloud access to MI300 and MI350 GPUs for AI training and inferencing without hardware purchases.

Nvidia launched a similar cloud service, DGX Cloud Lepton, last month.

AMD shares have dropped 24% in the past year, while Nvidia’s stock rose 19%. Year to date, AMD is flat (-0.2%) and Nvidia is up 7%.

AMD expects an $800 million hit from U.S. export controls restricting AI chip sales to China. Nvidia took a $4.5 billion write-down and expects to lose out on $8 billion in Q2 sales because of the same restrictions.