Cornelis Networks has launched a new AI-focused networking fabric, the CN5000. The company says it scales to 500,000 processors with no added latency, which would be a large jump over current systems.

This isn’t just a tweak on Ethernet or InfiniBand. Cornelis is pitching a third pillar of networking technology, built specifically for scaling massive AI and HPC workloads. According to the company, the CN5000 delivers twice the message rate of InfiniBand NDR with 35% lower latency, and AI communication runs six times faster than over Ethernet.

The core is Cornelis’s dynamic adaptive routing, which steers traffic around congested hotspots in real time. It is paired with congestion control that works like highway on-ramp metering: when switches spot a jam, senders are slowed down before they add to it.

Philip Murphy, co-founder and COO at Cornelis Networks, explained:

“If there’s an event at a stadium that we all want to go to, you don’t want the traffic that’s going past the stadium to get caught there too.”

“Think of mitigating traffic as it comes onto a highway on-ramp.”
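The two ideas in Murphy's analogy, routing around a hotspot and metering traffic at the on-ramp, can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not Cornelis's implementation: the `Port` type, the `pick_port` and `sender_rate` helpers, the queue-depth threshold of 8, and the halving policy are all hypothetical and chosen only to make the behavior visible.

```python
from dataclasses import dataclass


@dataclass
class Port:
    name: str
    queue_depth: int  # packets currently waiting on this output port (hypothetical metric)


def pick_port(candidates):
    """Adaptive routing: among valid output ports, choose the least congested."""
    return min(candidates, key=lambda p: p.queue_depth)


def sender_rate(base_rate, queue_depth, threshold=8):
    """On-ramp metering: halve the injection rate while the reported queue is too deep.

    The threshold and the halving policy are illustrative assumptions.
    """
    if queue_depth > threshold:
        return base_rate / 2
    return base_rate


ports = [Port("a", 12), Port("b", 3), Port("c", 7)]
best = pick_port(ports)
print(best.name)                             # b
print(sender_rate(100.0, best.queue_depth))  # 100.0 (no congestion on the chosen port)
print(sender_rate(100.0, 12))                # 50.0 (sender throttled toward the hot port)
```

Traffic that can avoid the hot port is rerouted, and only senders that must cross it are slowed, which mirrors the stadium example: through traffic is not caught in the jam.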

The CN5000 uses credit-based flow control, which avoids the large memory buffers that traditional Ethernet relies on. Receive buffer space is pre-allocated, so senders always know how much data they can push without waiting for acknowledgments.
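Credit-based flow control in general can be sketched in a few lines. The following is a simplified model of the technique, not the CN5000's actual protocol: the `CreditLink` class, its method names, and the two-slot buffer are hypothetical. Each credit stands for one pre-allocated receive slot; the sender spends a credit per packet and stalls, rather than dropping data, when none remain.

```python
class CreditLink:
    """Toy model of a link with credit-based flow control (illustrative only)."""

    def __init__(self, buffer_slots):
        self.credits = buffer_slots  # sender's view of free, pre-allocated receiver slots
        self.rx_buffer = []          # packets waiting at the receiver

    def send(self, packet):
        """Send only if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False             # stalled: nothing is dropped or overrun
        self.credits -= 1
        self.rx_buffer.append(packet)
        return True

    def drain(self):
        """Receiver consumes one packet and returns a credit to the sender."""
        if not self.rx_buffer:
            return None
        packet = self.rx_buffer.pop(0)
        self.credits += 1
        return packet


link = CreditLink(buffer_slots=2)
results = [link.send(p) for p in ("p0", "p1", "p2")]
print(results)           # [True, True, False]: the third packet waits for a credit

link.drain()             # receiver frees one slot, returning a credit
retry = link.send("p2")
print(retry)             # True
```

Because the sender can never have more packets in flight than the receiver has slots, the receiver's buffer can be sized exactly, which is the property the article contrasts with Ethernet's larger buffers.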

The network is also built for resilience. If a GPU or link fails, the whole system keeps running at slightly reduced bandwidth, with no need for slow restarts or frequent checkpoints.

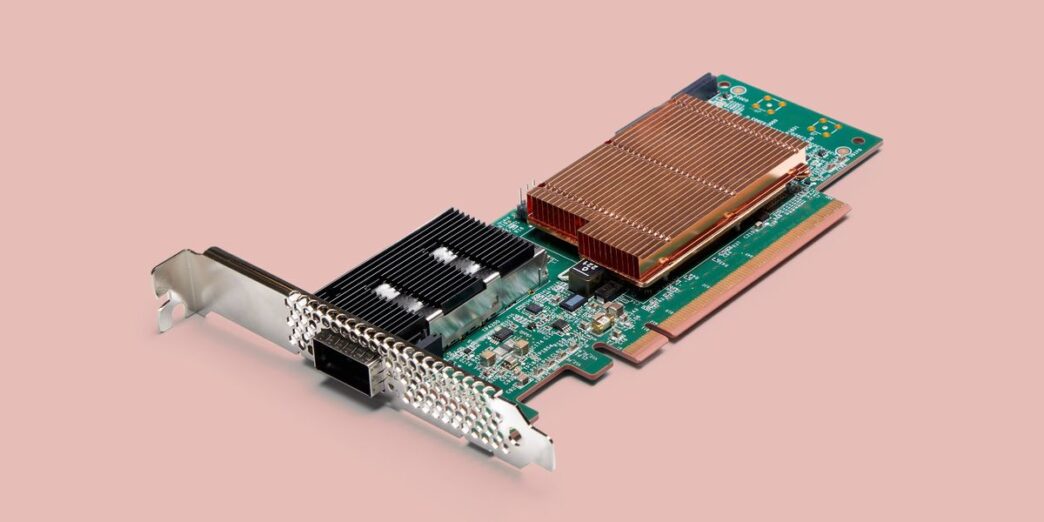

Physically, the CN5000 is a custom network card installed like an Ethernet adapter on servers. It plugs into top-of-rack switches and director-class switches, enabling giant clusters with thousands of endpoints.

Cornelis targets clients upgrading clusters for AI and advanced simulations. They work with OEM partners who assemble and ship full solutions with Cornelis cards inside.

Murphy stressed the urgency of efficient AI infrastructure, adding:

“If you don’t adopt AI, you’re going out of business. If you use AI inefficiently, you’ll still go out of business.”

“Our customers want to adopt AI in the most efficient way possible.”