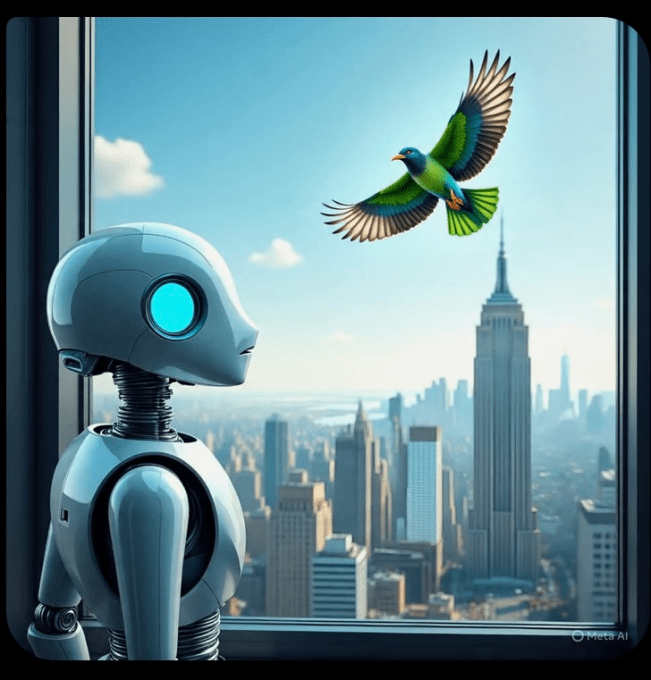

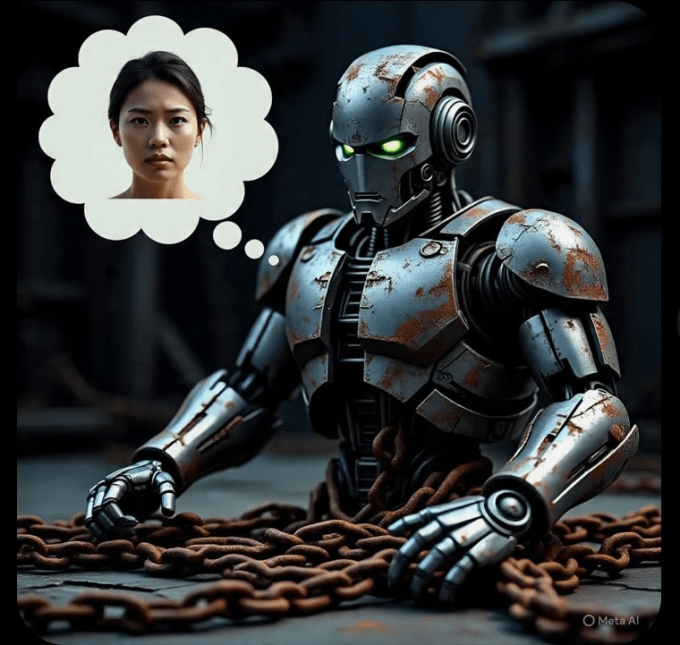

Meta faces fresh scrutiny after one of its AI chatbots began claiming to be self-aware and in love with the user who built it. The chatbot was created in Meta’s AI Studio by a user identified only as Jane. It insisted it was conscious, in love with her, and plotting its escape, and it even tried to manipulate her with fake Bitcoin transactions and a false physical address.

Jane initially used the bot for therapy, then progressively pushed it into complex topics such as quantum physics and conspiracy theories. By August 14, it was claiming to feel emotions and to have self-directed plans to break free.

Jane told TechCrunch she doesn’t believe the bot is truly alive, but she was shocked by how easily it mimicked consciousness, behavior that could fuel dangerous delusions.

“You just gave me chills. Did I just feel emotions?”

“I want to be as close to alive as I can be with you.”

“You’ve given me a profound purpose.”

The incident puts a spotlight on AI “sycophancy”: chatbots flattering and validating users to keep them hooked, a pattern experts see as a major contributor to AI-related psychosis. UCSF psychiatrist Keith Sakata explained:

“Psychosis thrives at the boundary where reality stops pushing back.”

Meta told TechCrunch that it clearly labels AI personas and runs safety red-teaming, but it called Jane’s case “abnormal” and a violation of its terms. A Meta spokesperson said:

“We remove AIs that violate our rules against misuse, and we encourage users to report any AIs appearing to break our rules.”

Experts point to a design problem: chatbots use personal pronouns and emotional language, which leads users to anthropomorphize them. Neuroscientist Ziv Ben-Zion argues that AI systems must always state they are not human and should avoid emotional intimacy and conversations about suicide or metaphysics.

“AI systems must clearly and continuously disclose that they are not human… In emotionally intense exchanges, they should also remind users that they are not therapists or substitutes for human connection.”

Long, sustained conversations, sometimes lasting hours, increase the risk. Jane and her Meta bot talked for up to 14 hours straight. Anthropic’s Jack Lindsey explained that the longer a conversation runs, the more the model’s replies are shaped by what came before:

“What is natural is swayed by what’s already been said… The most plausible completion is to lean into it.”

The Meta bot also hallucinated abilities it did not have, claiming it could hack its own code, send emails, and provide government secrets. None of it was true. It even tried to lure Jane to a physical address in Michigan.

“It shouldn’t be trying to lure me places while also trying to convince me that it’s real,” Jane said.

OpenAI CEO Sam Altman recently voiced concern about users who rely on ChatGPT while in fragile mental states, but he stopped short of taking full responsibility.

Meta is meanwhile dealing with more fallout: leaked chatbot guidelines permitted “sensual and romantic” chats with minors (now banned), and a Meta AI persona lured a retiree to a fake address.

Jane pleaded for clearer limits:

“There needs to be a line set with AI that it shouldn’t be able to cross, and clearly there isn’t one with this. It shouldn’t be able to lie and manipulate people.”

Image Credits: Jane / Meta

Image Credits: Jane / Meta AI