The AI industry is racing to cut its massive energy use amid soaring demand. Data centers could consume 3% of global electricity by 2030, double their share today, according to the International Energy Agency.

McKinsey warns the world might face an electricity crunch unless data center capacity and efficiency both ramp up fast.

University of Michigan’s Mosharaf Chowdhury highlights two fixes: increase energy supply or use energy more efficiently. His lab’s AI algorithms cut chip power needs by 20–30%.

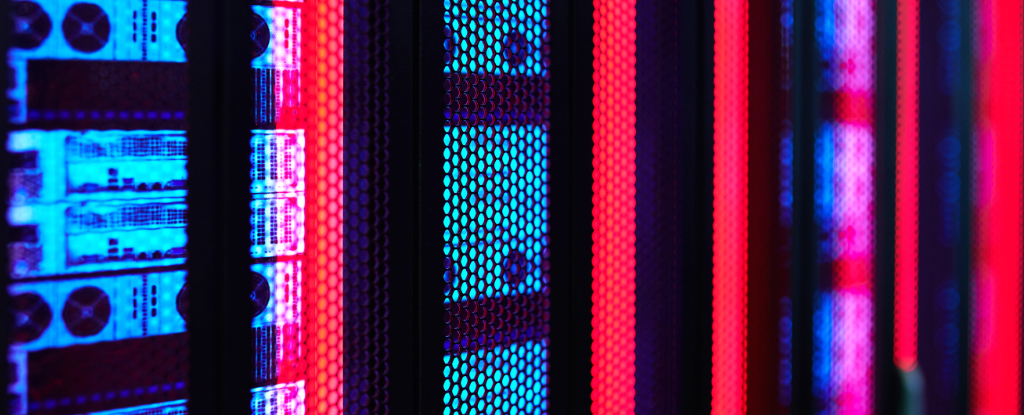

Twenty years ago, running a data center's supporting infrastructure, such as cooling, took about as much energy as the servers themselves; today that overhead is just 10% of server energy. AI-driven sensors now manage cooling more precisely, saving water and power, says McKinsey’s Pankaj Sachdeva.
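The size of that shift is easier to see as arithmetic. This is a rough sketch that assumes the overhead figures are measured against the servers' own (IT) energy and takes both numbers at face value. Here $S$ stands for server energy and $O$ for overhead; both symbols are introduced only for illustration. The ratio $(S+O)/S$ is the standard metric known as power usage effectiveness (PUE).

```latex
\begin{aligned}
\text{Overhead share of total} &= \frac{O}{S+O}, \qquad \text{PUE} = \frac{S+O}{S} \\[4pt]
\text{20 years ago } (O = S):&\quad \frac{S}{2S} = 50\%, \qquad \text{PUE} = 2.0 \\[4pt]
\text{Today } (O = 0.1\,S):&\quad \frac{0.1\,S}{1.1\,S} \approx 9.1\%, \qquad \text{PUE} = 1.1
\end{aligned}
```

Under these assumptions, overhead has fallen from half of a facility's total consumption to less than a tenth of it.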

Liquid cooling is the biggest breakthrough. Instead of relying on noisy air conditioners, liquid coolant runs directly through the servers. AWS recently launched its own liquid-cooling system for Nvidia GPUs to avoid costly data center rebuilds.

Amazon’s Dave Brown explained the decision in a YouTube video:

"There simply wouldn’t be enough liquid-cooling capacity to support our scale."

Purdue’s Yi Ding points out a catch: bigger efficiency gains slow the pace of chip replacement, which hurts semiconductor sales.

Energy use will still climb, Ding predicts, just not as fast.

The U.S. sees energy as key to beating China in AI.

Chinese startup DeepSeek built an AI model that matches leading U.S. systems while using less powerful chips and skipping energy-heavy training steps.

China also leads in energy supply, especially renewables and nuclear power, giving it a big edge in the AI race.