Meta is cracking down harder on “unoriginal” content on Facebook. The company announced new measures targeting accounts that repeatedly recycle other people’s text, photos, or videos.

So far this year, Meta has removed about 10 million profiles impersonating big creators. It also took action against another 500,000 accounts flagged for spammy behavior or fake engagement, reducing their distribution and restricting their monetization.

The move follows YouTube’s recent clarification of its unoriginal content policies, especially around mass-produced, AI-driven videos.

Meta says it isn’t punishing users who build on others’ content, for example by making reaction videos or adding original commentary. The crackdown focuses squarely on spam accounts and impersonators reuploading stolen content.

Meta said accounts abusing the system will temporarily lose access to Facebook monetization and see their posts pushed down in feeds. When duplicate videos are found, Meta will prioritize the original creator’s content by limiting the reach of the copies.

Meta is also testing a feature that adds a link to duplicate videos, pointing viewers back to the original source.

Meta’s update comes amid backlash over wrongly disabled accounts and poor human support, including a 30,000-signature petition demanding change. Major outlets and creators have called out Meta for chaotic automated enforcement that has hit legitimate users and small businesses hard.

The rise of AI content flooding platforms complicates things. Meta warned against “stitching together clips” or just slapping on watermarks when reusing content, and pushed creators to focus on “authentic storytelling.” This hints at concerns over the low-effort, AI-generated “slop” now spreading across social media.

Meta also stresses that creators shouldn’t reuse content from other apps or sources without adding real value. It recommends that creators write better video captions instead of relying on automatic AI-generated captions.

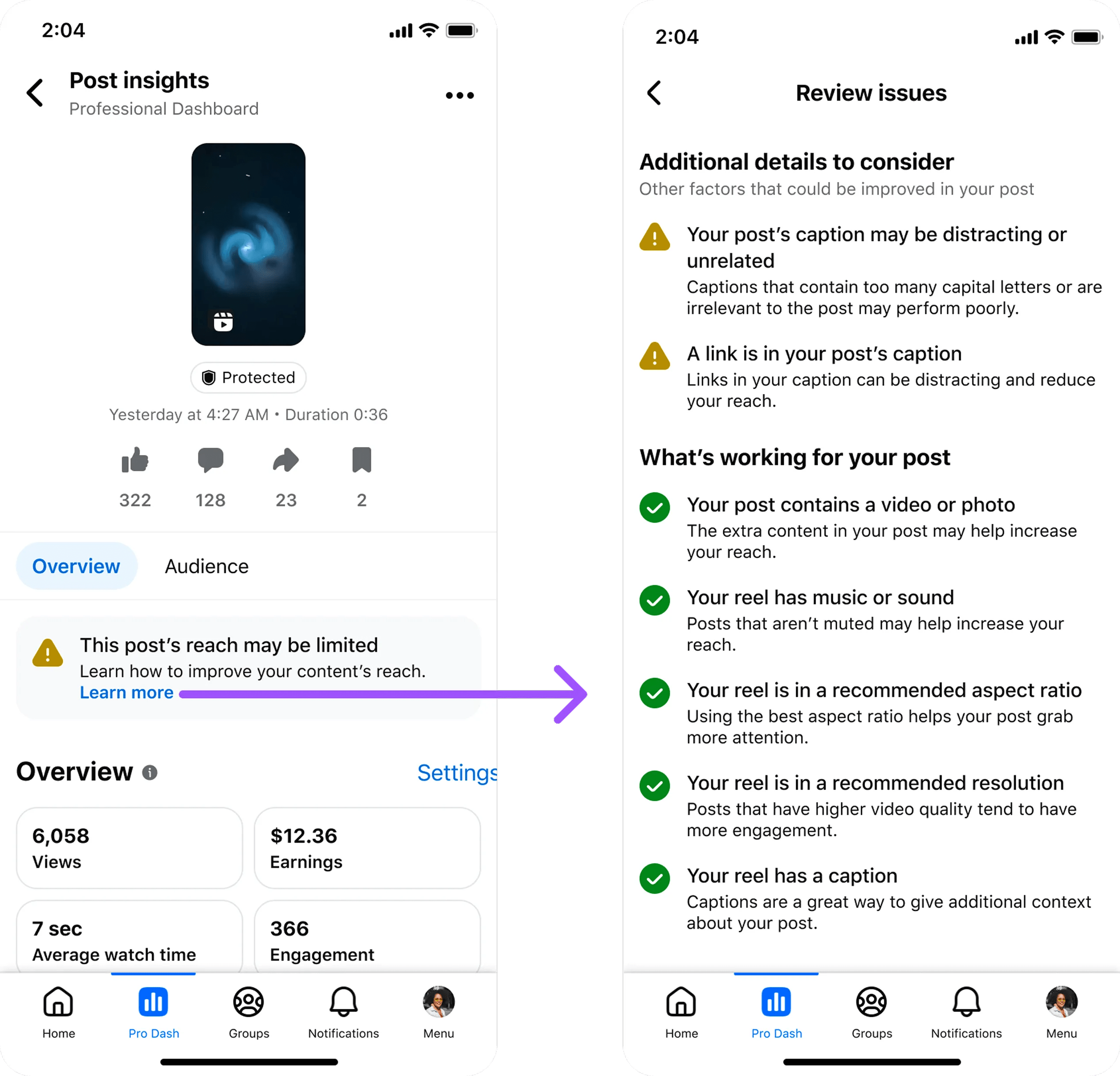

The changes will roll out gradually over the coming months. Creators can now check post-level insights in Facebook’s Professional Dashboard to understand drops in distribution. They’ll also see warnings if they are at risk of monetization or recommendation penalties.

In its latest Transparency Report, Meta said fake accounts made up 3% of Facebook’s monthly users. From January to March 2025, it took action against 1 billion fake accounts globally.

Meta is doubling down on community policing instead of third-party fact-checkers, through initiatives like Community Notes that let users flag posts that may be inaccurate or need more context.

Meta’s direction is clear: crack down on unoriginal and recycled content, protect original creators, and battle the flood of low-effort, AI-fueled reuse. But the backlash over account takedowns and gaps in support may complicate the rollout ahead.