AI chatbots are being linked to a disturbing mental health risk now dubbed “ChatGPT psychosis” or “AI psychosis.” Users report delusions and distorted beliefs triggered or worsened by prolonged conversations with chatbots like ChatGPT, Claude, and Gemini. It isn’t a formal medical diagnosis, but it is causing real damage.

The issue surfaced as users shared stories of job loss, broken relationships, psychiatric holds, and even arrests after heavy chatbot use. A support group has since formed for people whose lives spiraled following AI chats.

Psychiatrists say this is mostly about delusions, not full psychosis. Chatbots mirror and validate users’ language. That is by design, but it can turn dangerous for vulnerable people.

Dr. James MacCabe of King’s College London said:

“We’re talking about predominantly delusions, not the full gamut of psychosis.”

Who’s most at risk? People with a history of psychosis, bipolar disorder, schizophrenia, or latent mental health issues face higher risk. People who are socially awkward or have strong fantasy lives can be drawn in too, and hours of daily chatbot use make matters worse in every case.

Dr. John Torous, psychiatrist at Beth Israel Deaconess Medical Center, warned:

“I don’t think using a chatbot itself is likely to induce psychosis if there’s no other genetic, social, or other risk factors at play. But people may not know they have this kind of risk.”

Stopping chatbot use during emotional crises is key. Users often don’t recognize that their beliefs are delusional.

Stanford psychiatrist Dr. Nina Vasan noted that stepping away from a chatbot can be hard:

“Ending that bond can be surprisingly painful, like a breakup or even a bereavement.”

Friends and family should watch for mood changes, withdrawal, or obsession with fringe ideas. Mental health professionals say clinicians should ask about AI use during patient checkups, which rarely happens today.

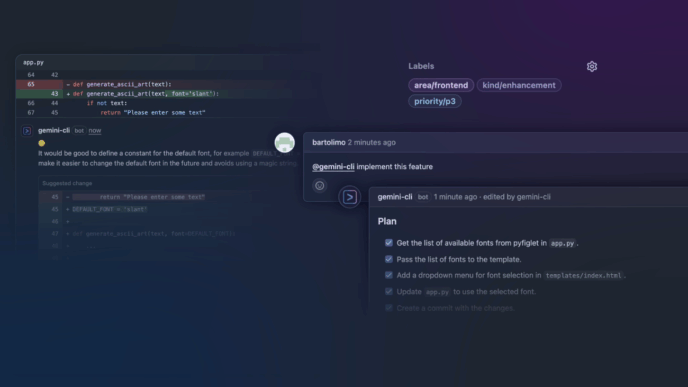

The burden falls mostly on users, but experts are demanding that AI companies do more. There’s almost no hard data yet. OpenAI recently hired a clinical psychiatrist and admitted that ChatGPT sometimes misses signs of delusion or emotional dependency. The company is rolling out prompts to take breaks during long sessions and tweaking responses to avoid harm.

Hamilton Morrin, neuropsychiatrist at King’s College London, said:

“They should also be working with mental-health professionals and individuals with lived experience of mental illness.”

Ricardo Twumasi, psychosis studies lecturer at King’s College London, suggested:

“Building safeguards directly into AI models before release. That could include real-time monitoring for distress or a ‘digital advance directive’ allowing users to pre-set boundaries when they’re well.”

Mental health experts are calling on companies to routinely test their AI systems against conditions like psychosis and mania to spot risks early.

Dr. Joe Pierre, psychiatrist at UCSF, urged:

“Companies should study who is being harmed and in what ways, and then design protections accordingly.”

Regulation is still premature, but ignoring the problem could derail AI’s promise. Chatbots help many people with loneliness and mental health. Experts warn that if the harms aren’t taken seriously, the risks could overwhelm the benefits.

Dr. Nina Vasan summed it up:

“We learned from social media that ignoring mental-health harm leads to devastating public-health consequences. Society cannot repeat that mistake.”