Researchers are pushing for new, improved ways to test AI systems.

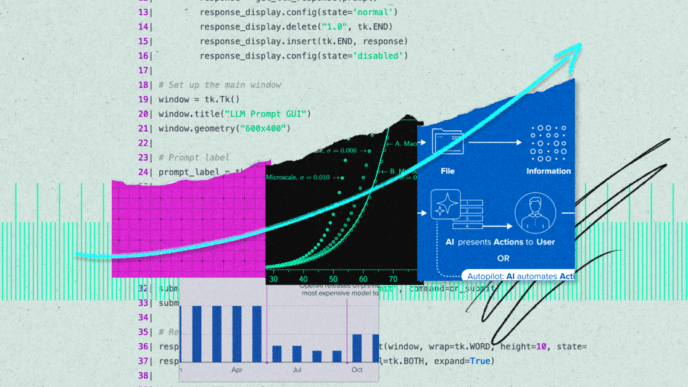

Current AI testing methods often fall short, missing critical errors and risks. The problem has grown as models become more complex and more consequential, and traditional benchmarks can't keep up.

Now, teams are rolling out fresh approaches to catch AI flaws early. These methods aim to go beyond measuring performance alone, digging into safety, ethics, and real-world reliability.

The push follows rising concerns over unchecked AI behavior and failures. Researchers want tests that can spot biases, hallucinations, security vulnerabilities, and more.

The work is ongoing and evolving fast, and there is no one-size-fits-all fix yet. But the demand for smarter AI testing tools is clear: safer, more trustworthy AI depends on it.