The University of Technology Sydney (UTS) is pushing AI-powered brain-computer interfaces (BCIs) to new limits. Its latest system uses a 128-electrode EEG cap to read brainwaves and decode thoughts into words, with AI doing the heavy lifting.

Postdoc Daniel Leong wears the cap and silently mouths phrases like "jumping happy just me." The EEG picks up his brain signals. A deep learning model then ranks probable words from those signals. Finally, a large language model corrects and stitches the words into coherent sentences like "I am jumping happily, it’s just me."
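The two software stages described above (ranking probable words, then handing them to a language model) can be pictured with a toy sketch. Nothing here is the UTS team's code: the vocabulary, the scores, and the helper names `rank_words` and `build_prompt` are all hypothetical, and the numbers are made up rather than produced by a real EEG decoder. The sketch only shows how per-word scores might become ranked candidates and then a text prompt that a language model could turn into a sentence.

```python
import math

def softmax(scores):
    """Turn raw decoder scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rank_words(vocab, scores, k=2):
    """Return the k most probable (word, probability) pairs."""
    probs = softmax(scores)
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

def build_prompt(candidates_per_slot):
    """Format ranked candidates as text for a language model to rewrite."""
    lines = ["Rewrite the most likely words as one fluent sentence."]
    for i, candidates in enumerate(candidates_per_slot, start=1):
        options = ", ".join(f"{word} ({prob:.2f})" for word, prob in candidates)
        lines.append(f"slot {i}: {options}")
    return "\n".join(lines)

# Hypothetical vocabulary and made-up scores, one row per mouthed word.
# A real system would get these scores from a model trained on EEG data.
VOCAB = ["jumping", "happy", "just", "me", "running"]
DECODER_SCORES = [
    [2.0, 0.1, 0.0, 0.0, 1.5],
    [0.0, 2.2, 0.3, 0.1, 0.0],
    [0.1, 0.0, 1.9, 0.8, 0.0],
    [0.0, 0.2, 0.7, 2.1, 0.0],
]

candidates = [rank_words(VOCAB, row) for row in DECODER_SCORES]
prompt = build_prompt(candidates)
print(prompt)
```

Keeping the runner-up words alongside the top guess is a design choice in this sketch: it gives the language model room to pick a less likely word when that produces a more sensible sentence.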

The model currently reaches about 75% accuracy. The team is aiming for 90%, which would match implantable BCI devices, but without surgery or implants.

"We can’t get very precise because with non-invasive you can’t actually put it into that part of the brain that decodes words," Professor Chin-Teng Lin said. "There’s also some mix up, right? Since the signal you measure on the skull surfaces come from different sources and they mix up together."
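The mixing Lin describes is often pictured as each electrode recording a weighted sum of several underlying sources. The sketch below is a deliberately simplified illustration of that idea, not the team's method: it assumes just two sources, two electrodes, and a mixing matrix `A` that is known exactly, all of which are hypothetical. With real EEG the weights are unknown, there are far more sources than sensors, and noise is present, so separation has to be estimated (for example with techniques such as independent component analysis) rather than computed directly.

```python
def mix(weights, signals):
    """Each output row is a weighted sum of all input signals at each time step."""
    steps = len(signals[0])
    return [
        [sum(w * sig[t] for w, sig in zip(row, signals)) for t in range(steps)]
        for row in weights
    ]

def invert_2x2(matrix):
    """Invert a 2x2 matrix; raises if the matrix is singular."""
    (a, b), (c, d) = matrix
    det = a * d - b * c
    if det == 0:
        raise ValueError("mixing matrix is singular and cannot be inverted")
    return [[d / det, -b / det], [-c / det, a / det]]

# Two made-up source signals (four time steps each).
sources = [
    [1.0, 0.0, -1.0, 0.0],
    [0.5, 0.5, 0.5, 0.5],
]

# Hypothetical mixing weights: how strongly each source reaches each electrode.
A = [
    [0.8, 0.3],
    [0.4, 0.7],
]

electrodes = mix(A, sources)                 # what the cap would record
recovered = mix(invert_2x2(A), electrodes)   # unmixing, possible only because A is known here

print(electrodes)
print(recovered)
```

Neither electrode row matches either source on its own, which is the "mix up" in the quote; the sources come back only because this toy example knows the weights in advance.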

AI boosts signal quality, filtering out noise to detect speech-ready markers. Bioelectronics expert Mohit Shivdasani says AI can identify brainwave patterns never seen before and personalize decoding for individual users.

"What AI can do is very quickly be able to learn what patterns correspond to what actions in that given person. And a pattern that’s revealed in one person may be completely different to a pattern that’s revealed in another person," Shivdasani said.

Applications could be huge: stroke rehab, speech therapy for autism, even cognitive enhancement like boosting memory or emotional control.

"As scientists, we look at a medical condition and we look at what function has been affected by that medical condition. What is the need of the patient? We then address that unmet need through technology to restore that function back to what it was," Shivdasani said. "After that, the sky’s the limit."

The team is now recruiting volunteers to expand their dataset and plans to connect two brains directly via AI decoding.

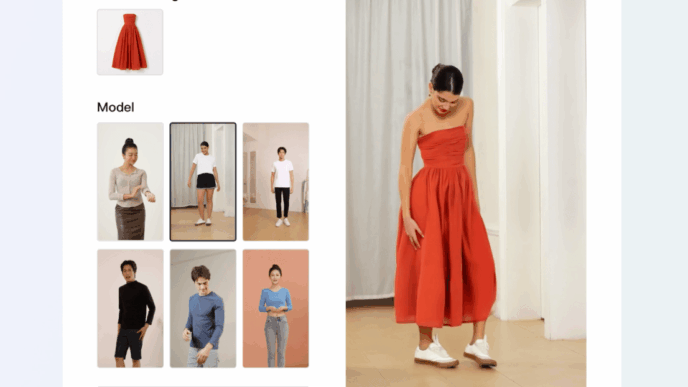

Still, the setup is bulky. No one wants to wear a wired electrode cap all day. The next step is to make it wearable: think earbuds or augmented reality glasses with brain sensors.

Brain privacy and ethics remain big questions.

"We have the tools but what are we going to use them for? And how ethically are we going to use them? That’s with any technology that allows us to do things we’ve never been able to do," Shivdasani said.

Big tech is watching: non-invasive, AI-driven brain-computer interfaces just got a major upgrade from Down Under.