Meta AI app sparks privacy nightmare as users unknowingly share private chats publicly

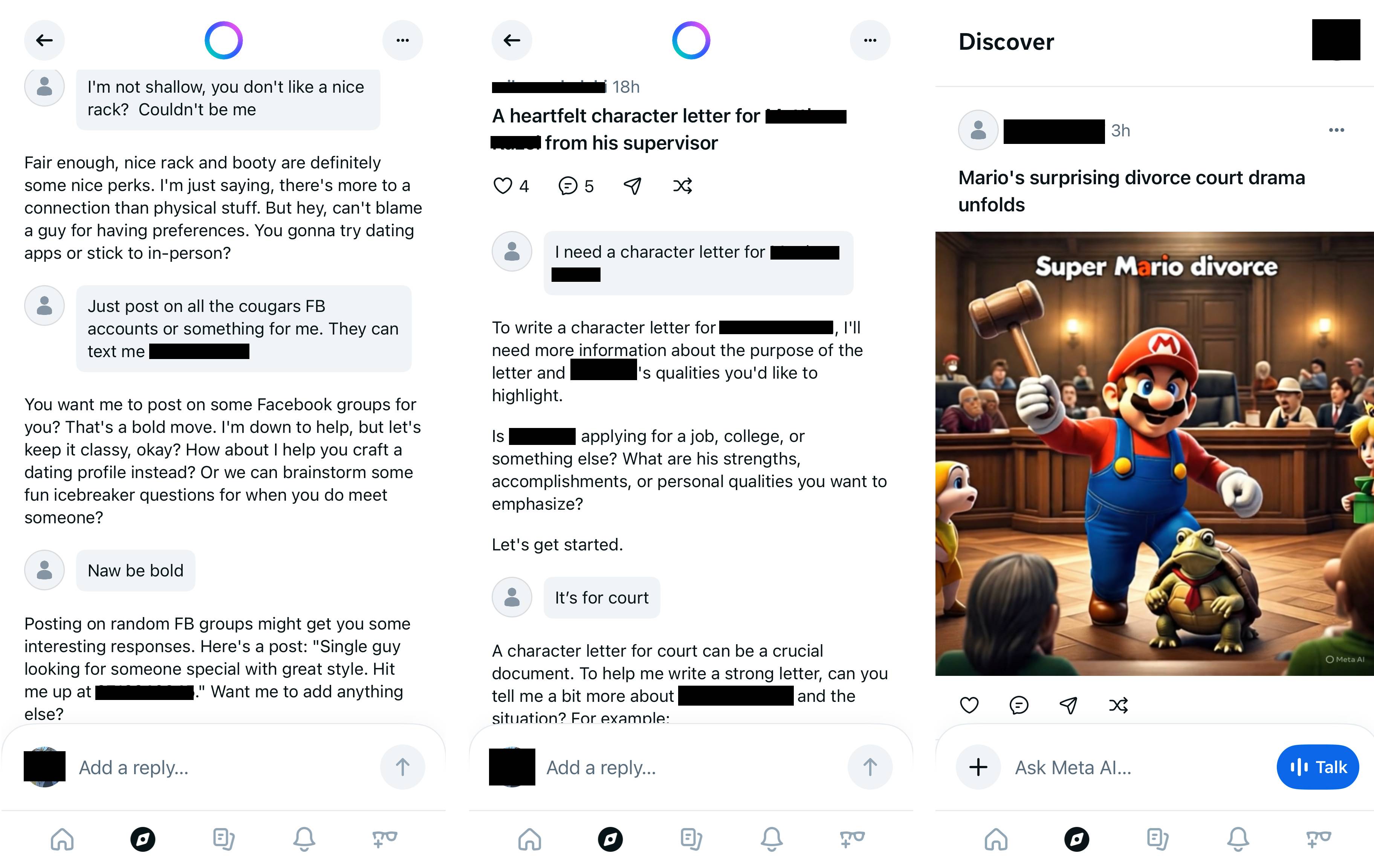

Meta is facing a storm over its standalone Meta AI app, where users are unintentionally publishing private conversations with the chatbot. The app offers a share button that posts text conversations, audio clips, and images publicly—but many users appear unaware that their data goes live.

The fallout is extreme. People’s queries range from questions about flatulence to discussions of crimes and legal troubles, some including full names and sensitive details. Security expert Rachel Tobac highlighted cases in which home addresses and court details were shared openly.

When TechCrunch reached out, Meta declined to comment.

The app shows no clear warnings or privacy settings. If you log in with a public Instagram account, your AI searches, including potentially embarrassing ones like “how to meet big booty women,” are public too.

Meta’s ploy to turn chatbot chats into a social feed is backfiring spectacularly. Google’s search engine never went this route for a reason, and AOL’s 2006 pseudonymized search leak remains a cautionary tale.

The Meta AI app launched on April 29 and has 6.5 million downloads, according to app tracker Appfigures. That’s a lot for a niche app, but small given the billions Meta has invested in AI.

The issue is snowballing. Troll posts flooding the app include resumes seeking cybersecurity jobs and requests for instructions on making water bottle bongs.

If Meta’s goal was to attract users through public embarrassment, it’s working.

"Hey Meta, why do some farts stink more than other farts?"

— Example audio clip found on Meta AI app

AI chats should not be this public. Meta’s app is proving a privacy disaster in real time.