Amsterdam’s Smart Check AI system aimed to cut wrongful welfare investigations but risks overreach

Amsterdam rolled out Smart Check, an AI system designed to take over from the caseworkers who flagged suspicious welfare applications. The goal: reduce unwarranted investigations and save €2.4 million a year by better spotting real errors.

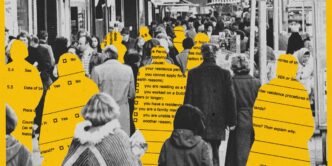

The system scores applicants on 15 factors, such as past benefits, asset sums, and address count. It leaves out sensitive attributes such as gender, nationality, and postal codes, aiming to cut bias and avoid “proxy” factors linked to ethnicity.
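The exclusion step described above can be pictured as a simple filter applied before any scoring happens. This is a minimal sketch, not the city’s code: the field names and the `select_model_features` helper are hypothetical, and only the three factors named in this article appear (the full list of 15 is not reproduced here).

```python
# Hypothetical field names: attributes the article says the model leaves out.
EXCLUDED_FIELDS = {"gender", "nationality", "postal_code"}


def select_model_features(application: dict) -> dict:
    """Return only the fields that may be passed on to the scoring model."""
    return {
        name: value
        for name, value in application.items()
        if name not in EXCLUDED_FIELDS
    }


# Illustrative application record with made-up values.
application = {
    "past_benefits": 2,
    "asset_sum": 1500.0,
    "address_count": 3,
    "gender": "f",
    "nationality": "NL",
    "postal_code": "1011",
}

features = select_model_features(application)
print(sorted(features))
```

Dropping a column this way only removes the attribute itself; whether the remaining factors still correlate with it is a separate question that a filter like this does not answer.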

The city trained Smart Check on 3,400 past investigations, hoping it would flag fewer applicants for scrutiny but catch more actual errors. Officials projected it could prevent 125 people from falling into debt due to mistaken fraud suspicions.

Data scientist Loek Berkers, who worked on Smart Check, said that “especially for a project within the municipality, it was very much a sort of innovative project that was trying something new.”

But the stakes are high. Caseworkers can currently delay payments, demand bank records, and make surprise home visits. More than half of investigations find no wrongdoing, leaving residents whom city official Bodaar describes as “wrongly harassed.”

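Smart Check uses an “explainable boosting machine,” a type of model in which each factor’s contribution to a score can be inspected separately, so users can see how a decision was reached, unlike with typical AI black boxes.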
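To make the “explainable” part concrete, here is a rough sketch of how an additive model of this kind produces a score. Each factor has its own lookup table of contributions, and the final score is their sum passed through a logistic function. Every number, bin edge, and name below (`SHAPES`, `INTERCEPT`, `explain`, `score`) is invented for illustration; none of it comes from the city’s model.

```python
import math
from bisect import bisect_right

# Illustrative per-factor tables: (bin edges, log-odds contribution per bin).
# A table with 2 edges has 3 bins: below the first edge, between, and above.
SHAPES = {
    "past_benefits": ([1, 3], [-0.2, 0.1, 0.4]),
    "asset_sum": ([1000.0, 10000.0], [0.3, 0.0, -0.3]),
    "address_count": ([2, 4], [-0.1, 0.2, 0.5]),
}
INTERCEPT = -1.0  # made-up baseline log-odds


def explain(features: dict) -> dict:
    """Return each factor's separate contribution to the score (in log-odds)."""
    contributions = {}
    for name, (edges, values) in SHAPES.items():
        bin_index = bisect_right(edges, features[name])
        contributions[name] = values[bin_index]
    return contributions


def score(features: dict) -> float:
    """Sum the contributions and map the total to a probability-like score."""
    logit = INTERCEPT + sum(explain(features).values())
    return 1.0 / (1.0 + math.exp(-logit))


applicant = {"past_benefits": 2, "asset_sum": 1500.0, "address_count": 3}
print(explain(applicant))
print(round(score(applicant), 3))
```

Because the total is a plain sum, a reviewer can read off which factor pushed a score up or down, which is the property that sets this model family apart from a black box. A real explainable boosting machine learns these tables from data and may also include pairwise interaction terms, which this sketch leaves out.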

The city openly shared multiple versions of the model for outside review, a rare move that invites scrutiny of how welfare applicants are evaluated.

Project lead de Koning said they’re “taking a scientific approach” to see if the system “was going to work,” rather than pushing it no matter what.


Officials hope Smart Check stops wrongly delayed benefits and wrongful debt, but it must prove it can do better than humans without unjustly targeting vulnerable people.