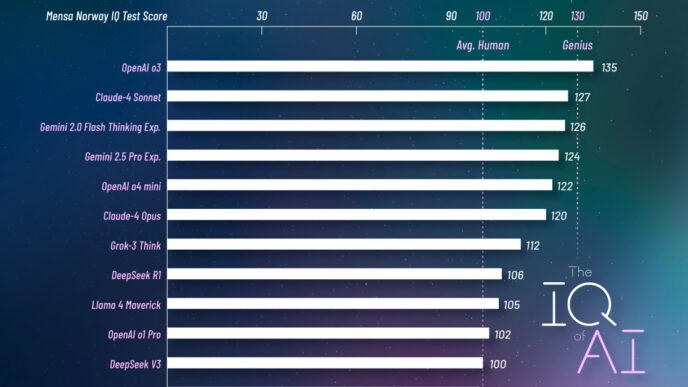

Apple AI researchers say don’t expect AGI next year. They tested “large reasoning models” (LRMs) like OpenAI’s o1/o3 and Anthropic’s Claude 3.7 on problems of steadily increasing complexity. The result: performance falls apart when problems get tricky.

LRMs work by breaking problems into intermediate steps, using techniques such as chain-of-thought reasoning to work through and check each stage. Apple’s researchers built a puzzle environment, including Tower of Hanoi, to avoid training-data contamination and benchmark gaming. The puzzles let them control exactly how complex each test got.
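To make the idea of a controllable puzzle concrete, here is a minimal Python sketch of Tower of Hanoi as a test bed. It is an illustration only, not the researchers’ actual environment: the function names `solve_hanoi` and `is_valid_solution` are hypothetical. It shows why the puzzle suits this kind of study: a single knob, the number of disks, sets the difficulty (the shortest solution takes 2^n − 1 moves), and any proposed move list can be checked mechanically by replaying it.

```python
from typing import List, Tuple

# A move is (source peg, destination peg); pegs are numbered 0, 1, 2.
Move = Tuple[int, int]


def solve_hanoi(n_disks: int, src: int = 0, dst: int = 2, aux: int = 1) -> List[Move]:
    """Return a shortest move list for n_disks using the classic recursion."""
    if n_disks == 0:
        return []
    return (
        solve_hanoi(n_disks - 1, src, aux, dst)    # park the n-1 smaller disks
        + [(src, dst)]                             # move the largest disk
        + solve_hanoi(n_disks - 1, aux, dst, src)  # restack the smaller disks
    )


def is_valid_solution(n_disks: int, moves: List[Move]) -> bool:
    """Replay the moves; require every step to be legal and the goal reached."""
    pegs = [list(range(n_disks, 0, -1)), [], []]  # largest disk at the bottom
    for src, dst in moves:
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            return False
        if not pegs[src]:
            return False  # nothing to move from an empty peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n_disks, 0, -1))


if __name__ == "__main__":
    # Difficulty ramps with the disk count: solution length doubles (plus one).
    for n in range(1, 11):
        moves = solve_hanoi(n)
        assert is_valid_solution(n, moves)
        print(f"{n} disks -> {len(moves)} moves")
```

Because the checker replays every move, a model’s answer can be graded step by step rather than only on its final state, and adding one disk roughly doubles the length of the required solution.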

The takeaway: these models do okay on medium-complexity tasks but fail completely beyond a certain complexity threshold. On easier problems, they even underperformed standard (non-reasoning) large language models, wasting compute on needless extra steps.

Lead author Parshin Shojaee and team wrote in their paper:

“[D]espite their sophisticated self-reflection mechanisms learned through reinforcement learning, these models fail to develop generalizable problem-solving capabilities for planning tasks, with performance collapsing to zero beyond a certain complexity threshold.”

They add:

“These insights challenge prevailing assumptions about LRM capabilities and suggest that current approaches may be encountering fundamental barriers to generalizable reasoning.”

Apple’s research puts a serious dent in optimism that LRMs can deliver human-level reasoning anytime soon. The idea that scaling up LRMs leads directly to AGI now faces new doubts.

The researchers’ full findings are available in their paper.