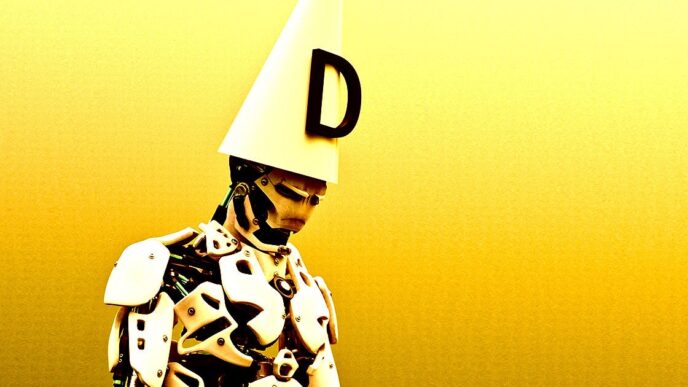

Apple researchers just dropped a bomb on advanced AI. Their new paper finds serious flaws in large reasoning models (LRMs), a cutting-edge AI tech designed to crack complex problems by breaking them down into steps.

The study tested LRMs on tough puzzles like Tower of Hanoi and River Crossing. Results? Standard AI models beat LRMs on simple tasks. Both crashed hard on anything very complex. LRMs even cut back their reasoning effort as problems got harder, which Apple called “particularly concerning.”

The collapse was total. At high complexity, some models failed to find any correct answers, even when they were given a step-by-step solution algorithm upfront. The researchers say this points to a “fundamental scaling limitation” in current reasoning AI.

The paper tested big names: OpenAI’s o3, Google’s Gemini Thinking, Anthropic’s Claude 3.7 Sonnet-Thinking, and DeepSeek-R1. OpenAI declined to comment. Anthropic, Google, and DeepSeek were approached for a response.

AI skeptic Gary Marcus called the paper “pretty devastating.” He warned that it undercuts the hype around large language models (LLMs) as a path to artificial general intelligence (AGI).

“Anybody who thinks LLMs are a direct route to the sort [of] AGI that could fundamentally transform society for the good is kidding themselves.”

Gary Marcus

Andrew Rogoyski from the University of Surrey says the findings hint that the AI field is “still feeling its way” and may have hit a dead end with current approaches.

“The finding that large reason[ing] models lose the plot on complex problems, while performing well on medium- and low-complexity problems implies that we’re in a potential cul-de-sac in current approaches.”

Andrew Rogoyski

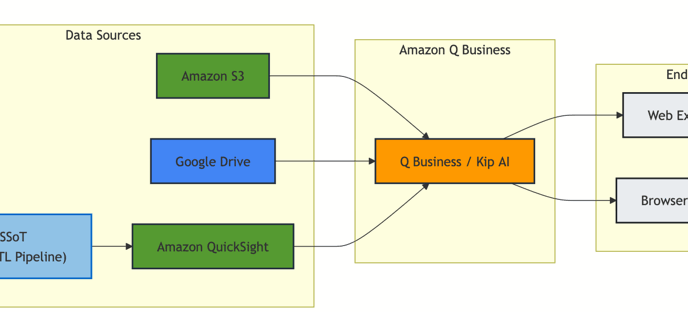

Apple’s paper says current AI models waste computing power on simple problems, often finding the right answer early in their “thinking” and then continuing to explore anyway, yet hit a wall on generalizable reasoning: the ability to apply a solution approach broadly. This casts doubt on the whole race to build smarter, more capable AI.