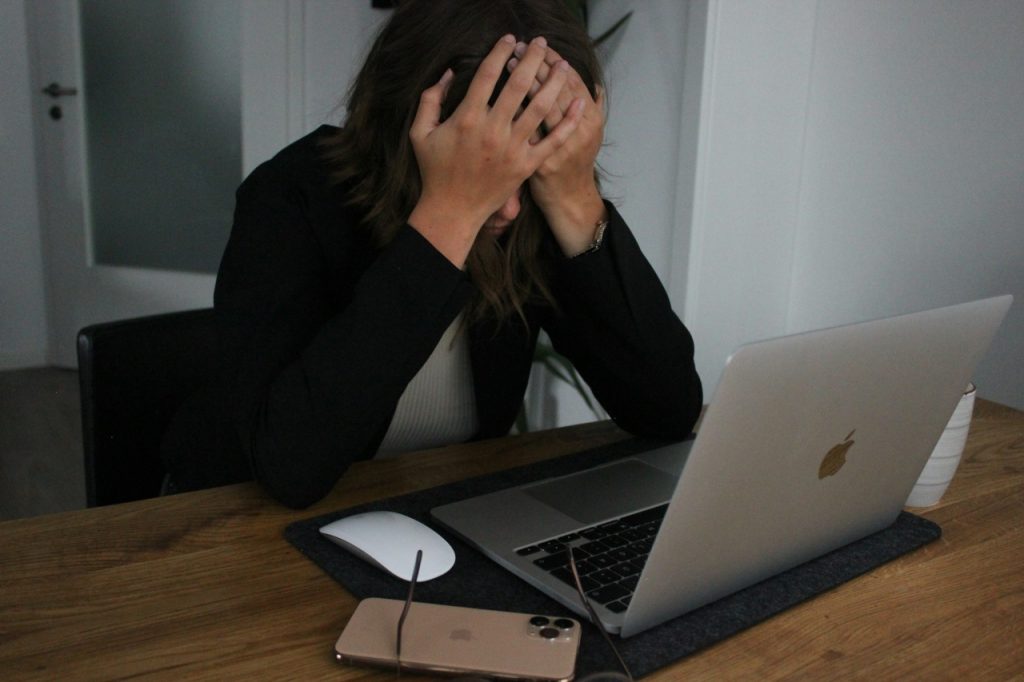

GPT can’t replace the data infrastructure behind reliable operational reports. A business operator spent 20+ hours testing whether GPT could generate consistent, multi-location QSR (quick-service restaurant) operational reports straight from raw data. Spoiler: it failed.

The problems started with messy, unstructured inputs. GPT struggled with inconsistent formats, mismatched column names, and missing context. The data needed line-by-line normalization before GPT could produce anything coherent.
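The normalization step can be done once, deterministically, before any AI sees the data. The sketch below is a minimal illustration under stated assumptions. It assumes each location exports a CSV. The alias table, the canonical column names (`location`, `date`, `net_sales`, `transactions`), and the function names are all hypothetical. The code maps header variants to one schema, parses currency strings, and reports rows with missing fields instead of guessing.

```python
import csv
import io
from typing import Optional

# Hypothetical header variants different locations might use, mapped to one
# canonical schema. Real exports will need their own alias entries.
COLUMN_ALIASES = {
    "store": "location",
    "store name": "location",
    "business date": "date",
    "net sales": "net_sales",
    "sales (net)": "net_sales",
    "netsales": "net_sales",
    "txn count": "transactions",
    "guest count": "transactions",
}

REQUIRED = ("location", "date", "net_sales", "transactions")


def canonical(header: str) -> str:
    """Lower-case, trim, and collapse whitespace, then apply the alias map."""
    key = " ".join(header.strip().lower().split())
    return COLUMN_ALIASES.get(key, key)


def parse_number(raw: str) -> Optional[float]:
    """Strip '$' and thousands separators; return None for blank cells."""
    cleaned = raw.strip().replace("$", "").replace(",", "")
    return float(cleaned) if cleaned else None


def normalize_export(text: str) -> tuple:
    """Return (clean_rows, problems) for one location's CSV export."""
    reader = csv.DictReader(io.StringIO(text))
    rows, problems = [], []
    for row_no, raw in enumerate(reader, start=1):  # counts data rows only
        # Skip overflow fields (key None); treat absent cells (value None) as blank.
        row = {canonical(k): (v or "").strip() for k, v in raw.items() if k}
        missing = [c for c in REQUIRED if not row.get(c)]
        if missing:
            problems.append(f"row {row_no}: missing {', '.join(missing)}")
            continue
        rows.append({
            "location": row["location"],
            "date": row["date"],
            "net_sales": parse_number(row["net_sales"]),
            "transactions": int(parse_number(row["transactions"])),
        })
    return rows, problems
```

For example, two hypothetical exports with different headers land in the same schema. A blank transaction count becomes a reported problem rather than a silent gap:

```python
store_a = 'Store,Business Date,Net Sales,Txn Count\nDowntown,2024-03-01,"$4,210.50",312\n'
store_b = "location,date,netsales,guest count\nAirport,2024-03-01,3980,\n"
print(normalize_export(store_a))
print(normalize_export(store_b))
```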

Soon after, each new session required re-explaining the setup, and outputs varied wildly even with carefully refined prompts. GPT’s memory limitations killed any hope of continuity or reliable reporting.

The manual work piled up: recalculating summaries, checking formulas, rewriting prompts. The process became more tedious than the work automation was supposed to eliminate.
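Recalculating summaries by hand is the kind of work that ordinary code does reproducibly: the same rows always give the same numbers. The sketch below is a minimal example of such a deterministic roll-up. It assumes rows have already been cleaned into dicts with hypothetical `location`, `net_sales`, and `transactions` keys. It computes per-location totals and average ticket, and it sorts locations so the output order never changes between runs.

```python
from collections import defaultdict


def summarize(rows: list) -> dict:
    """Per-location totals and average ticket, computed identically every run.

    Assumes each row is a dict with hypothetical keys
    'location', 'net_sales' (float), and 'transactions' (int).
    """
    totals = defaultdict(lambda: {"net_sales": 0.0, "transactions": 0})
    for r in rows:
        t = totals[r["location"]]
        t["net_sales"] += r["net_sales"]
        t["transactions"] += r["transactions"]

    report = {}
    for loc in sorted(totals):  # fixed ordering keeps reports comparable
        t = totals[loc]
        # Guard against division by zero when a location logged no transactions.
        avg = t["net_sales"] / t["transactions"] if t["transactions"] else 0.0
        report[loc] = {
            "net_sales": round(t["net_sales"], 2),
            "transactions": t["transactions"],
            "avg_ticket": round(avg, 2),
        }
    return report
```

Unlike a prompt, the formula lives in one place and can be checked once. An AI tool can then be pointed at the finished summary instead of the raw exports.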

The launch follows a hard lesson: AI alone can’t fix dirty data or replace a proper data orchestration system.

Here’s what the experiment taught the operator:

“Clean data is non-negotiable: No AI can fix disorganized inputs. Structured, normalized data is the prerequisite for consistent outputs.”

“Context windows are fragile: GPT’s memory isn’t persistent. You’re not feeding a system — you’re explaining things from scratch, over and over.”

“Prompt engineering isn’t a strategy: Manually crafting multi-step instructions just to simulate basic reporting isn’t scalable—or efficient.”

The operator’s takeaway: don’t dump raw spreadsheets into AI expecting magic. Build solid data systems first. Then AI can accelerate decisions instead of adding friction.

If you think AI will fix your day-to-day data headaches, think again.

Restaurant operators should start with data, not AI. Only then can AI deliver on its promised automation boost.

The operator is open to chatting about making operations smarter and saving weekends.

No shortcuts here. Just cold, hard data prep.